Revolutionizing Energy Transition Through Computational Intelligence

The global shift toward sustainable energy requires sophisticated analytical approaches to manage the complex retirement of coal-fired power plants. Recent advances in computational methods offer new insight into how this transition can be sequenced while maintaining grid reliability and economic stability. Using graph representation techniques, researchers are developing tools to identify effective retirement strategies based on each plant's contextual vulnerabilities.

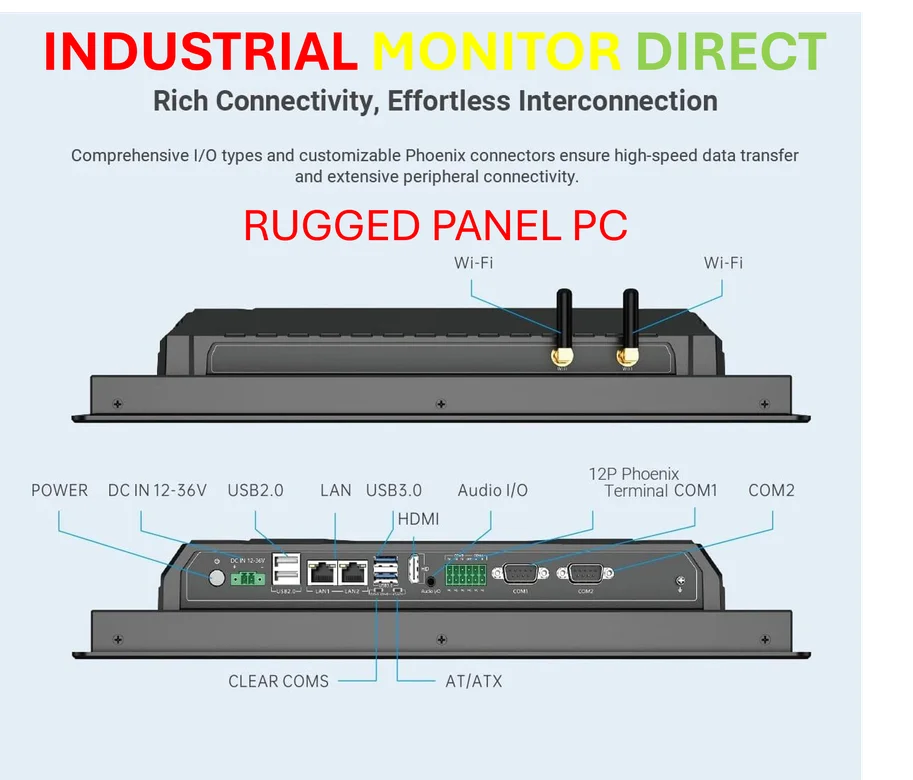

The Multiverse Analysis Framework

At the heart of this analytical revolution lies the THEMA (Topological Hyperparameter Exploration and Multiverse Analysis) algorithm, which represents a significant advancement in handling sparse, high-dimensional energy datasets. Unlike traditional single-model approaches, THEMA systematically explores vast hyperparameter spaces to generate diverse graph representations of coal plant networks. This multiverse methodology acknowledges that different algorithmic choices profoundly impact learned representations and subsequent analytical outcomes.

The algorithm's strength comes from its ability to identify and extract the structural patterns that remain consistent across many representations. By measuring distances between graph models with semi-metrics and applying domain-specific filters, THEMA reduces an enormous model space to a concise set of structurally distinct representatives. As a result, retirement strategies draw on multiple perspectives rather than on a single, potentially biased model.

Five-Stage Analytical Pipeline

The THEMA framework operates in five stages that turn raw coal plant data into actionable retirement insights:

Data Preprocessing and Feature Engineering

The first stage builds complete vector representations of the plants. Researchers use sampling-based imputation to fill missing values, producing ten randomly imputed versions of the dataset that feed into the subsequent stages. This avoids the data loss that comes from discarding incomplete records, and because the ten versions differ, the uncertainty of the imputation is carried forward into the analysis.
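The article does not spell out the sampling scheme. A minimal sketch, assuming each missing entry is filled by drawing uniformly from the observed values in the same column (one simple form of sampling-based imputation; the function name `sample_impute` is hypothetical), looks like this with NumPy:

```python
import numpy as np

def sample_impute(X, n_imputations=10, seed=0):
    """Return n_imputations copies of X in which every NaN is replaced by a
    value drawn at random from the observed (non-missing) entries of its column.
    Hypothetical helper: the sampling scheme is an assumption."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    imputed = []
    for _ in range(n_imputations):
        Xi = X.copy()
        for j in range(X.shape[1]):
            missing = np.isnan(X[:, j])
            observed = X[~missing, j]
            if missing.any() and observed.size > 0:
                # Each missing cell gets an independent draw from the observed values.
                Xi[missing, j] = rng.choice(observed, size=missing.sum())
        imputed.append(Xi)
    return imputed

# Toy example: two plants each have one missing feature.
X = np.array([[1.0, np.nan], [2.0, 5.0], [np.nan, 7.0]])
versions = sample_impute(X, n_imputations=10)
```

Because every copy uses different random draws, downstream results can be compared across the ten versions to see how sensitive they are to the missing data.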

Categorical variables, which make up approximately 12% of the dataset, are converted to numerical form with standard one-hot encoding. Although this increases dimensionality, the subsequent manifold learning stage handles the additional feature directions. All variables are then standardized with scikit-learn's scaling routine, so that every feature has zero mean and unit variance.
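A small illustration of the one-hot encoding and standardization steps using pandas and scikit-learn's `StandardScaler`. The plant table and its column names (`capacity_mw`, `age_years`, `fuel_type`) are invented for the example and are not the study's actual features:

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical toy plant table: two numeric columns and one categorical column.
plants = pd.DataFrame({
    "capacity_mw": [500.0, 1200.0, 800.0, 300.0],
    "age_years": [45.0, 30.0, 52.0, 60.0],
    "fuel_type": ["bituminous", "subbituminous", "lignite", "bituminous"],
})

# One-hot encode the categorical column: one 0/1 column per category.
encoded = pd.get_dummies(plants, columns=["fuel_type"], dtype=float)

# Standardize every column (numeric and one-hot) to zero mean and unit variance.
X = StandardScaler().fit_transform(encoded)
```

The three fuel categories become three indicator columns, so the table grows from three to five features; this is the extra dimensionality the manifold learning stage has to absorb.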

Dimensionality Reduction Through Manifold Learning

The second stage uses the Uniform Manifold Approximation and Projection (UMAP) algorithm to project the high-dimensional plant representations into lower-dimensional spaces. UMAP builds a weighted k-nearest-neighbor graph that approximates the data's manifold structure by encoding local geodesic distances between points, then optimizes low-dimensional coordinates to preserve that structure.
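To make the graph-construction step concrete, the following simplified sketch builds a UMAP-style fuzzy k-nearest-neighbor graph. Each point's distances to its k neighbors are shifted by the distance to its nearest neighbor (rho) and scaled by a bandwidth sigma, found by binary search so that the weights sum to log2(k); the directed weights are then merged with a fuzzy union. It follows the construction described in the UMAP paper but omits details of the reference implementation (approximate neighbor search, its self-neighbor convention, and the layout optimization). `fuzzy_knn_graph` is a hypothetical name.

```python
import numpy as np

def fuzzy_knn_graph(X, k=4, n_iter=64):
    """Simplified UMAP-style weighted k-nearest-neighbor graph."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Brute-force pairwise Euclidean distances (fine for small n).
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    np.fill_diagonal(D, np.inf)  # a point is not its own neighbor
    W = np.zeros((n, n))
    target = np.log2(k)
    for i in range(n):
        nbrs = np.argsort(D[i])[:k]   # indices of the k nearest neighbors
        d = D[i, nbrs]
        rho = d[0]                    # distance to the closest neighbor
        lo, hi = 1e-8, 1e4            # bisection bounds for sigma
        for _ in range(n_iter):
            sigma = (lo + hi) / 2
            s = np.exp(-np.maximum(d - rho, 0) / sigma).sum()
            if s > target:            # weights too large: shrink sigma
                hi = sigma
            else:
                lo = sigma
        W[i, nbrs] = np.exp(-np.maximum(d - rho, 0) / sigma)
    # Fuzzy union symmetrization: w_ij + w_ji - w_ij * w_ji.
    return W + W.T - W * W.T

rng = np.random.default_rng(1)
W = fuzzy_knn_graph(rng.normal(size=(20, 3)), k=4)
```

Each point is guaranteed a weight of 1 to its nearest neighbor, which keeps every point connected to the graph regardless of local density.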

Because parameter choices significantly affect the result, THEMA generates 160 distinct low-dimensional embeddings by systematically varying key UMAP parameters, including the number of neighbors (4, 8, 12, 16) and the minimum distance (0.05, 0.1, 0.25, 0.5). This exploration captures a range of plausible manifold representations of the relationships between plants.
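The embedding count can be reproduced by enumeration, assuming the 160 runs come from crossing the ten imputed datasets with the 4 × 4 grid of neighbor counts and minimum distances. That product matches the numbers given, but the text says "including", so other settings may also vary. With the umap-learn package, each configuration would correspond to a `umap.UMAP(n_neighbors=..., min_dist=...)` model.

```python
from itertools import product

imputation_ids = range(10)                # ten imputed datasets from stage one
n_neighbors_values = [4, 8, 12, 16]
min_dist_values = [0.05, 0.1, 0.25, 0.5]

# Each (imputed dataset, n_neighbors, min_dist) triple is one embedding run.
umap_grid = [
    {"imputation": i, "n_neighbors": k, "min_dist": d}
    for i, k, d in product(imputation_ids, n_neighbors_values, min_dist_values)
]
print(len(umap_grid))  # 160
```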

Graph Model Construction

Stage three turns the embeddings into interpretable graph models using the Mapper algorithm, which builds a graph from three ingredients: a lens function (here, a UMAP embedding), a covering of the lens space, and a clustering algorithm. The implementation uses cubical coverings, whose parameters set how local each cluster is, and explores different configurations by varying the number of cubes (5, 7, 10, 14, 20) and the overlap fraction (0.45 to 0.65).
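A minimal sketch of a cubical cover, assuming the common construction in which each axis of the lens is split into `n_cubes` equal-width intervals and neighboring intervals overlap by the given fraction. The function name and the exact width formula are illustrative and may differ from the implementation used with THEMA:

```python
import numpy as np
from itertools import product

def cubical_cover(lens, n_cubes, overlap):
    """Assign points of a d-dimensional lens to overlapping hypercubes.

    Returns {cell index tuple: array of point indices inside that cell}.
    """
    lens = np.asarray(lens, dtype=float)
    lo, hi = lens.min(axis=0), lens.max(axis=0)
    # Equal-width intervals per axis: solve w + (n_cubes - 1) * w * (1 - overlap) = hi - lo.
    width = (hi - lo) / (1 + (n_cubes - 1) * (1 - overlap))
    step = width * (1 - overlap)            # offset between consecutive interval starts
    tol = 1e-9 * np.maximum(hi - lo, 1.0)   # guard against round-off at the edges
    cover = {}
    for cell in product(range(n_cubes), repeat=lens.shape[1]):
        start = lo + np.array(cell) * step
        inside = np.all((lens >= start - tol) & (lens <= start + width + tol), axis=1)
        if inside.any():
            cover[cell] = np.flatnonzero(inside)
    return cover

rng = np.random.default_rng(0)
lens = rng.uniform(size=(200, 2))           # stand-in for a 2-D UMAP embedding
cover = cubical_cover(lens, n_cubes=5, overlap=0.5)
```

Mapper then clusters the points inside each cell separately; because cells overlap, a point can belong to several clusters, and those shared points become the edges of the graph. Sweeping `n_cubes` and `overlap` over the listed values produces the family of graphs described above.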

The clustering component uses HDBSCAN, a density-based algorithm that labels points which do not belong to a sufficiently dense cluster as noise instead of forcing them into one. This gives rise to the crucial concept of coverage: the percentage of plants assigned to well-defined clusters within a given graph model.
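Coverage can be computed from the per-cell clustering results. This sketch assumes noise points carry the label -1, the convention used by the `hdbscan` package and by scikit-learn's `HDBSCAN`; the `coverage` function and the toy labels are hypothetical:

```python
import numpy as np

def coverage(n_points, clustered_cells):
    """Fraction of points that land in at least one non-noise cluster.

    clustered_cells: iterable of (point_indices, labels) pairs, one per cover
    cell, where labels[i] is the cluster of point_indices[i] and -1 marks noise.
    """
    covered = np.zeros(n_points, dtype=bool)
    for idx, labels in clustered_cells:
        idx, labels = np.asarray(idx), np.asarray(labels)
        covered[idx[labels != -1]] = True
    return covered.mean()

# Ten hypothetical plants spread over two overlapping cover cells.
cells = [
    ([0, 1, 2, 3, 4, 5], [0, 0, 0, -1, 1, 1]),
    ([5, 6, 7, 8, 9], [0, 0, -1, -1, 0]),
]
cov = coverage(10, cells)
```

Here only 7 of the 10 plants end up in a non-noise cluster, giving a coverage of 0.7, so this graph model would fail an 85% coverage threshold.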

Intelligent Model Selection

The fourth stage condenses thousands of generated graph models into a small, representative subset in four steps. First, a boolean filter discards models that cover less than 85% of the coal fleet. Second, semi-metrics quantify the structural similarity between graphs. Third, similar models are grouped into partitions. Finally, the models in each partition are sorted by domain-specific criteria to select the best representative.
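The four steps can be sketched as follows. THEMA's actual semi-metrics and sorting criteria are not given here, so the sketch substitutes stand-ins: the L1 distance between normalized degree histograms as the graph dissimilarity, single-linkage grouping under a threshold `eps` as the partition, and highest coverage as the sorting key. All names (`GraphModel`, `select_representatives`, and so on) are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class GraphModel:
    name: str
    coverage: float       # fraction of plants in non-noise clusters
    degrees: list[int]    # node degrees of the Mapper graph

def degree_histogram(degrees, max_degree=10):
    hist = [0.0] * (max_degree + 1)
    for d in degrees:
        hist[min(d, max_degree)] += 1
    total = sum(hist) or 1.0
    return [h / total for h in hist]

def dissimilarity(a, b):
    # Stand-in dissimilarity: L1 distance between normalized degree histograms.
    ha, hb = degree_histogram(a.degrees), degree_histogram(b.degrees)
    return sum(abs(x - y) for x, y in zip(ha, hb))

def select_representatives(models, min_coverage=0.85, eps=0.2):
    # Step 1: boolean filter on coverage.
    kept = [m for m in models if m.coverage >= min_coverage]
    # Steps 2-3: link models closer than eps; connected groups form the partition.
    groups, unvisited = [], set(range(len(kept)))
    while unvisited:
        stack, group = [unvisited.pop()], []
        while stack:
            i = stack.pop()
            group.append(kept[i])
            near = {j for j in unvisited if dissimilarity(kept[i], kept[j]) < eps}
            unvisited -= near
            stack.extend(near)
        groups.append(group)
    # Step 4: keep the top-ranked model in each group (here: highest coverage).
    return [max(g, key=lambda m: m.coverage) for g in groups]

models = [
    GraphModel("A", 0.90, [1, 2, 2, 1]),
    GraphModel("B", 0.95, [1, 2, 2, 1]),
    GraphModel("C", 0.88, [3, 3, 3, 3]),
    GraphModel("D", 0.70, [1, 1]),   # removed by the coverage filter
]
reps = select_representatives(models)
```

Models A and B have identical degree profiles and fall into one group, from which B is kept for its higher coverage; C forms its own group; D never passes the filter.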

Contextual Graph Analytics

The final stage leverages path distances within selected graph models to develop contextual proximity measures. These metrics enable detailed analysis of relationships between plants and their retirement vulnerabilities, providing actionable intelligence for policymakers and energy planners.
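One way to turn a Mapper graph into a plant-to-plant proximity is sketched below, assuming that two nodes are linked when their plant sets overlap (the usual Mapper rule) and that proximity is the fewest hops between any node holding one plant and any node holding the other. The node names and plant labels are hypothetical, and the source does not say whether THEMA uses hop counts or weighted paths:

```python
from collections import deque
from itertools import combinations

def mapper_edges(nodes):
    """Connect two Mapper nodes when their plant sets intersect."""
    adj = {n: set() for n in nodes}
    for u, v in combinations(nodes, 2):
        if nodes[u] & nodes[v]:
            adj[u].add(v)
            adj[v].add(u)
    return adj

def plant_distance(nodes, adj, a, b):
    """Fewest hops from any node holding plant a to any node holding plant b."""
    sources = [n for n, members in nodes.items() if a in members]
    dist = {n: 0 for n in sources}
    queue = deque(sources)               # multi-source breadth-first search
    while queue:
        u = queue.popleft()
        if b in nodes[u]:
            return dist[u]
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return float("inf")                  # plants in disconnected components

# Hypothetical Mapper graph: each node is a cluster of plants.
nodes = {
    "n0": {"A", "B"},
    "n1": {"B", "C"},
    "n2": {"C", "D"},
    "n3": {"E"},
}
adj = mapper_edges(nodes)
```

With this graph, plants A and D are two hops apart, while E sits in its own component and has infinite distance to every other plant, which flags it as contextually isolated.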

Practical Applications for Energy Transition

This analytical framework offers tangible benefits for accelerating coal phase-out strategies. By identifying plants with similar contextual vulnerabilities, stakeholders can develop coordinated retirement schedules that minimize grid disruption and maximize economic efficiency. The multiverse approach ensures that recommendations remain robust across different modeling assumptions and parameter choices.

The methodology's ability to handle incomplete data and categorical variables makes it particularly valuable for real-world energy datasets, which often contain missing values and mixed data types. Furthermore, the 85% coverage requirement ensures that analyses span nearly the entire fleet rather than selected subsets, providing comprehensive retirement roadmaps.

As energy systems worldwide continue their transition toward sustainability, computational frameworks like THEMA will play increasingly crucial roles in optimizing retirement sequences, allocating transition resources, and ensuring reliable electricity supply throughout the transformation process.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

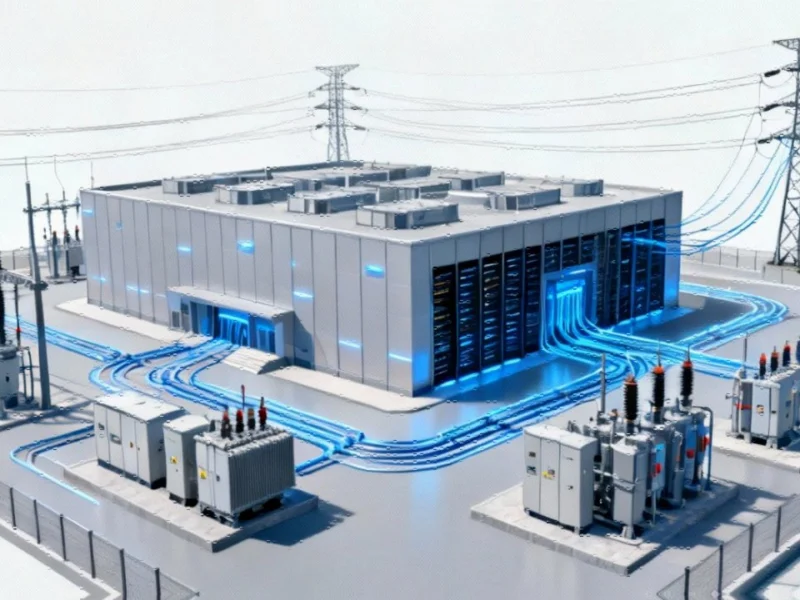

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.