According to Innovation News Network, the AI conversation for 2026 is shifting decisively from experimentation to execution, with industry leaders warning that the “AI bubble” may pop and separate real value from distraction. Bruce Martin, CEO of Tax Systems, says the challenge is cutting through the noise, while Blue Yonder’s research shows that 74% of industry leaders believe AI is already transforming operations. Terry Storrar of Leaseweb UK sees a move from explosive hype to pragmatic growth, and Jay Hack from eMaint at Fluke Corporation says buyers now want evidence of what AI has done, not what it could do. Meanwhile, as the cyber arms race intensifies, more than 70% of enterprise security teams are expected to deploy AI-based tools for triage, detection, or response by the end of 2026.

The Great Reckoning

Here’s the thing: we’ve all sat through those breathless demos. You know, the ones where an AI magically writes a sonnet or generates a weird image of a cat in space. That era is closing. The quote from Fluke’s Jay Hack really nails it: “The demonstration phase is over; expectations have replaced curiosity.” Businesses are tired of guided demo tours. They’re in the “show me the money” phase, and if your AI can’t be tied directly to efficiency gains or customer satisfaction, it’s just an expensive toy. This is a healthy, if painful, correction. It means the companies that invested in AI as a PR stunt are about to have a very awkward 2026, while those that focused on applied machine learning or, as Fluent Commerce’s Nicola Kinsella points out, agentic automation for real-time adaptation will start pulling ahead.

Infrastructure Gets Real (and Expensive)

And this push for execution is forcing a brutal infrastructure reality check. Carlos Sandoval Castro from the LTO Program highlights the elephant in the server room: generative AI is creating unstructured data at a pace that “exceeds traditional IT budgets.” The solution isn’t just throwing more expensive cloud storage at the problem. It’s about smart, tiered architectures that might even bring tape storage back into the conversation for deep archives. This is the unsexy backend work that defines real adoption. You can’t run a global, AI-driven supply chain, like the ones Blue Yonder discusses, on a rickety data foundation.
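To make “tiered architecture” a little more concrete, here is a minimal Python sketch of one common idea: routing data to cheaper storage as it goes untouched for longer, with tape as the last stop for deep archives. The tier names, the day thresholds, and the `assign_tier` helper are all hypothetical, chosen purely for illustration; they are not figures from the LTO Program or any vendor, and real policies weigh cost, retrieval time, and compliance rules too.

```python
from dataclasses import dataclass

# Hypothetical tiering rules: (max days since last access, tier name).
# Checked in order, so the first matching tier wins.
TIER_RULES = [
    (30, "hot"),    # frequently accessed: fast, expensive storage
    (180, "warm"),  # occasional access: cheaper object storage
    (730, "cold"),  # rare access: archival cloud tier
]
DEEP_ARCHIVE = "tape"  # anything older than every rule lands on tape


@dataclass
class DataObject:
    name: str
    days_since_access: int


def assign_tier(obj: DataObject) -> str:
    """Return the first tier whose age limit covers this object."""
    for max_days, tier in TIER_RULES:
        if obj.days_since_access <= max_days:
            return tier
    return DEEP_ARCHIVE


if __name__ == "__main__":
    # Hypothetical examples of AI-generated unstructured data.
    objects = [
        DataObject("prompt_logs_today", 1),
        DataObject("model_outputs_q1", 120),
        DataObject("training_snapshot_2023", 900),
    ]
    for o in objects:
        print(o.name, "->", assign_tier(o))
```

The design point is simply that placement is driven by access patterns rather than by default: only a small slice of data needs to live on the expensive tier, and the long tail can drift down to media priced for capacity.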

The New Battlefields: Resilience and Security

But with great power comes great… vulnerability. The article’s experts sound genuinely alarmed about the security implications of agentic AI systems making autonomous decisions. Mark Skelton of Node4 warns that the rush toward AGI is happening “far quicker than we previously believed,” and guardrails can’t be an afterthought. Laurie Mercer from HackerOne puts it starkly: “What used to take state-level capability is now accessible to a teenager with a jailbroken model.” The response? Security teams have to weaponize AI just as fast. This is where Martin Gittins of Commvault introduces the idea of “Resilience Operations” or ResOps—a holistic approach that bakes security, identity, and recovery into the AI system’s design from the start. In 2026, hesitation isn’t just slow; it’s a critical vulnerability.

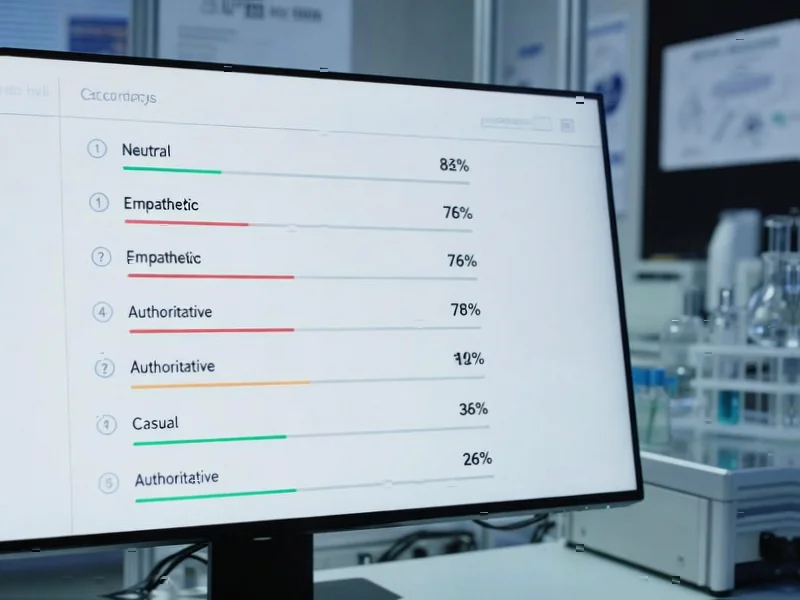

The Human Skill That Matters: Prompt Literacy

So where does this leave the average knowledge worker? Charis Thomas from Aqilla makes a brilliant analogy. We all had to learn how to effectively search the internet—it was a new literacy. Now, we need “prompt literacy.” It’s not about clever tricks; it’s about learning how to research, interrogate, and collaborate with an AI agent. The divide won’t be between companies using AI or not. It’ll be between teams that use it thoughtfully and those who blindly follow its outputs. Chris Lloyd from Syspro ties this directly to leadership trust, which is built on reliable data. Basically, if your data is a mess, your AI will be a liar, and no one will trust it. The execution phase of AI depends as much on cleaning our data and training our people as it does on buying the latest model. That’s the real work of 2026.