TITLE: AI-Powered Debugging Transforms Kubernetes Troubleshooting

META_DESCRIPTION: New approaches combining AI analysis with traditional debugging are cutting Kubernetes incident resolution times from hours to minutes, sources report.

EXCERPT: Enterprise Kubernetes troubleshooting is undergoing a radical transformation as organizations integrate AI-powered analysis with traditional debugging methods. According to industry reports, these hybrid approaches are dramatically reducing incident resolution times while shifting DevOps from reactive firefighting to proactive prevention.

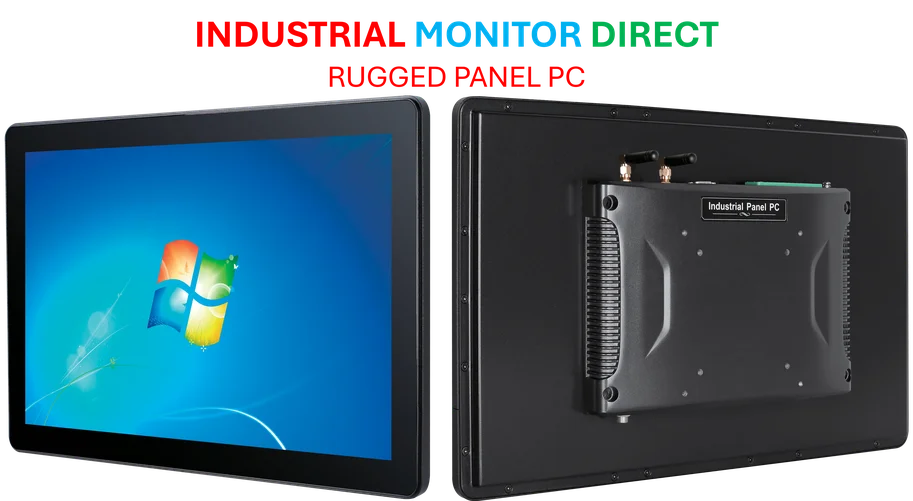

The New Era of Kubernetes Diagnostics

Diagnoses that once took skilled engineers more than an hour in Kubernetes environments now reportedly take under ten minutes with emerging AI-assisted debugging pipelines. Industry sources indicate that financial institutions and large tech companies are leading adoption of these hybrid approaches, which pair traditional command-line diagnostics with machine learning analysis.

“Debugging distributed systems has always been an exercise in controlled chaos,” one technical report observed, noting that while Kubernetes abstracts away deployment complexity, those same abstractions often obscure where things actually go wrong. The challenge has become particularly acute at enterprise scale, where automation isn’t just beneficial—it’s essential for maintaining system resilience.

From Reactive Log Hunting to Structured Analysis

Traditional Kubernetes troubleshooting typically begins with manual inspection of pods and events, but the new methodology introduces AI summarization at the earliest stages. Engineers are now feeding logs and event summaries into models like GPT-4 or Claude with prompts such as “Summarize likely reasons for this CrashLoopBackOff and list next diagnostic steps,” according to documented approaches.
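
To make the summarization step concrete, here is a minimal Python sketch that assembles such a prompt from pod events and the tail of the container logs. The `PodEvent` type, the `build_triage_prompt` function, and the 50-line cap are hypothetical illustrations; the sketch only builds the prompt text and deliberately leaves out the call to any particular model API.

```python
from dataclasses import dataclass


@dataclass
class PodEvent:
    """Hypothetical, minimal view of a Kubernetes event attached to a pod."""
    reason: str      # e.g. "BackOff"
    message: str     # human-readable event message
    count: int = 1   # how many times the event was observed


def build_triage_prompt(pod: str, events: list[PodEvent], log_tail: list[str],
                        max_log_lines: int = 50) -> str:
    """Assemble an LLM prompt from pod events and the most recent container log lines."""
    logs = log_tail[-max_log_lines:]  # keep only the newest lines to bound prompt size
    return "\n".join([
        "Summarize likely reasons for this CrashLoopBackOff and list next diagnostic steps.",
        f"Pod: {pod}",
        "Events:",
        *(f"- [{e.reason}] x{e.count}: {e.message}" for e in events),
        f"Last {len(logs)} log lines:",
        *logs,
    ])


if __name__ == "__main__":
    prompt = build_triage_prompt(
        "payments-7d9f",
        [PodEvent("BackOff", "Back-off restarting failed container", 12)],
        ["x509: certificate has expired or is not yet valid"],
    )
    print(prompt)  # this text would then be sent to the model of your choice
```

Capping the log tail is a design choice rather than a requirement: it keeps prompts small and predictable, at the cost of possibly dropping an early clue.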

This represents a fundamental shift in workflow. Instead of first sifting through cryptic logs, engineers start from a structured summary of likely root causes and suggested next steps, which then guides their investigation. The technique appears particularly effective for common but complex failure patterns involving certificate expirations, storage subsystem degradation, and resource throttling.

Meanwhile, ephemeral containers, which can be attached to a running pod with kubectl debug, continue to serve as crucial "on-the-fly" debugging environments, letting engineers troubleshoot production issues without modifying base images or restarting the pod. Sources suggest these temporary diagnostic tools have become standard practice in organizations maintaining large-scale Kubernetes deployments.
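
For reference, an ephemeral debug container is typically attached with `kubectl debug`. The sketch below only assembles that command line in Python; the helper name `ephemeral_debug_argv` and the default `busybox:1.28` image are illustrative choices, and the `--target` flag (which lets the debug container see the target container's processes) depends on container runtime support.

```python
import shlex


def ephemeral_debug_argv(pod: str, target_container: str, namespace: str = "default",
                         image: str = "busybox:1.28") -> list[str]:
    """Build a `kubectl debug` command that adds an interactive ephemeral container to a running pod."""
    return [
        "kubectl", "debug", "-it", pod,
        "-n", namespace,
        f"--image={image}",              # debugging tools live here, not in the app image
        f"--target={target_container}",  # share the target container's process namespace (runtime permitting)
    ]


if __name__ == "__main__":
    argv = ephemeral_debug_argv("payments-7d9f", "app", namespace="prod")
    print(shlex.join(argv))
    # To actually attach (requires cluster access): subprocess.run(argv, check=True)
```

Building an argument list instead of a shell string avoids quoting problems if the command is later passed to `subprocess.run`.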

Machine Learning Meets Traditional Diagnostics

The integration points between conventional debugging and AI analysis are becoming increasingly sophisticated. Technical reports describe workflows where ML-based observability platforms like Dynatrace Davis or Datadog Watchdog automatically detect anomalies such as periodic I/O latency spikes, then recommend affected pods for deeper investigation.
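
The vendors' detection models are proprietary, so the following is only a simplified stand-in: a robust median/MAD outlier test that flags I/O latency spikes per pod and returns the pods worth a closer look. The function name, the 3.5 threshold, and the input shape (a dict of per-pod latency samples) are assumptions for illustration.

```python
from statistics import median


def flag_latency_spikes(series: dict[str, list[float]],
                        threshold: float = 3.5) -> dict[str, list[int]]:
    """Return {pod: [sample indices]} for I/O latency samples that are robust outliers.

    Uses the modified z-score 0.6745 * (x - median) / MAD, which, unlike a
    mean/std z-score, is not inflated by the very spikes it is looking for.
    """
    flagged: dict[str, list[int]] = {}
    for pod, samples in series.items():
        med = median(samples)
        mad = median([abs(x - med) for x in samples])
        if mad == 0:
            continue  # at least half the samples sit exactly at the median; a real detector needs a fallback scale
        spikes = [i for i, x in enumerate(samples) if 0.6745 * (x - med) / mad > threshold]
        if spikes:
            flagged[pod] = spikes
    return flagged


if __name__ == "__main__":
    latency_ms = {
        "db-0": [5, 6, 5, 6, 5, 6, 5, 40] * 2,  # periodic spike every 8th sample
        "web-0": [5, 6] * 8,                    # steady
    }
    print(flag_latency_spikes(latency_ms))  # {'db-0': [7, 15]}
```

A median-based score is used here because periodic spikes inflate an ordinary standard deviation enough that a plain z-score test can miss them.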

The hard problem these systems address is the volume and variety of Kubernetes telemetry. "AI correlation engines now automate symptom-to-resolution mapping in real time," one analysis noted, describing how unsupervised learning clusters similar error signatures while attention models correlate metrics across CPU, latency, and I/O.
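
Grouping error signatures can start with something much simpler than a learned model. This sketch is a deterministic stand-in, not the unsupervised methods the analysis describes: it masks variable fields (IP addresses, hex IDs, numbers) so that log lines differing only in those fields collapse to one signature, then counts them. The masking patterns and function names are illustrative assumptions.

```python
import re
from collections import Counter

# Order matters: mask IPs before generic numbers so an address becomes a single token.
_MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}(?::\d+)?\b"), "<IP>"),  # IPv4, optional :port
    (re.compile(r"\b[0-9a-f]{8,}\b"), "<HEX>"),                     # ids, hashes, name suffixes
    (re.compile(r"\b\d+(?:\.\d+)?(?:ms|s)?\b"), "<NUM>"),           # counts, durations
]


def signature(line: str) -> str:
    """Reduce a log line to a template by masking fields that vary between occurrences."""
    sig = line.lower()
    for pattern, token in _MASKS:
        sig = pattern.sub(token, sig)
    return sig


def group_errors(lines: list[str]) -> Counter:
    """Count how many log lines share each signature."""
    return Counter(signature(line) for line in lines)


if __name__ == "__main__":
    logs = [
        "dial tcp 10.0.3.17:5432: connect: connection refused",
        "dial tcp 10.0.3.22:5432: connect: connection refused",
        "timeout after 3000ms waiting for volume pvc-1a2b3c4d5e",
        "timeout after 2500ms waiting for volume pvc-9f8e7d6c5b",
    ]
    for sig, n in group_errors(logs).most_common():
        print(n, sig)
```

Signatures like these are also a common preprocessing step before any real clustering, since they shrink thousands of raw lines to a handful of templates.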

This hybrid approach merges deterministic data with probabilistic reasoning—a combination that appears especially valuable for financial services or other mission-critical clusters where both accuracy and speed matter.

Real-World Impact and Measurable ROI

The practical benefits are already materializing in production environments. One documented case study involved a financial transaction service that repeatedly failed post-deployment. According to the report, traditional debugging might have taken 90 minutes to identify the root cause: an expired intermediate certificate.

With AI-assisted root cause analysis, the same incident was resolved in eight minutes: the AI summarizer highlighted the expired certificate, and a Jenkins assistant then suggested reissuing the secret via cert-manager. Cutting resolution time from an estimated 90 minutes to eight, a reduction of roughly 90 percent, translates into improved service reliability and lower operational costs.
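
A check like the one that surfaced the expired intermediate can be approximated in a few lines. This sketch assumes each certificate's notAfter date has already been extracted (for example with `openssl x509 -noout -enddate`, with the `notAfter=` prefix stripped) in the "Mon DD HH:MM:SS YYYY GMT" form that Python's `ssl.cert_time_to_seconds` parses; the `check_chain` name and the 14-day warning window are hypothetical.

```python
import ssl
import time
from typing import Optional

SECONDS_PER_DAY = 86_400


def check_chain(chain: list[tuple[str, str]], now: Optional[float] = None,
                warn_days: int = 14) -> dict[str, str]:
    """Classify each certificate in a chain as 'EXPIRED', 'EXPIRING SOON', or 'ok'.

    `chain` holds (name, notAfter) pairs, notAfter formatted like 'Jun 10 12:00:00 2024 GMT'.
    """
    now = time.time() if now is None else now
    report = {}
    for name, not_after in chain:
        expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
        if expires <= now:
            report[name] = "EXPIRED"
        elif expires - now <= warn_days * SECONDS_PER_DAY:
            report[name] = "EXPIRING SOON"
        else:
            report[name] = "ok"
    return report


if __name__ == "__main__":
    chain = [
        ("leaf", "Dec 31 23:59:59 2024 GMT"),
        ("intermediate", "Jun 10 12:00:00 2024 GMT"),
        ("root", "Jun 20 00:00:00 2024 GMT"),
    ]
    now = ssl.cert_time_to_seconds("Jun 15 00:00:00 2024 GMT")
    print(check_chain(chain, now=now))
    # {'leaf': 'ok', 'intermediate': 'EXPIRED', 'root': 'EXPIRING SOON'}
```

Checking every certificate in the chain, not just the leaf, matters here: a valid leaf signed by an expired intermediate still fails validation.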

Beyond incident response, organizations are now exploring predictive autoscaling, which moves past reactive, threshold-based scaling to systems that forecast utilization with regression models and add capacity before demand arrives. Early implementations reportedly reduce latency incidents by 25 to 30 percent in large-scale environments.
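
The difference from threshold-based scaling can be sketched with the Kubernetes Horizontal Pod Autoscaler's documented rule, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), fed a least-squares forecast instead of the current reading. This is an illustrative assumption about how a regression-based autoscaler might work, not a description of any specific product; the function names, three-interval horizon, and replica bounds are hypothetical.

```python
import math


def linear_forecast(samples: list[float], steps_ahead: int = 1) -> float:
    """Fit an ordinary least-squares line through (index, value) and extrapolate past the last sample."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    x_mean = (n - 1) / 2
    y_mean = sum(samples) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(samples))
    slope = sxy / sxx if sxx else 0.0  # a single sample gives a flat forecast
    intercept = y_mean - slope * x_mean
    return intercept + slope * (n - 1 + steps_ahead)


def desired_replicas(current_replicas: int, cpu_history: list[float], target: float,
                     steps_ahead: int = 3, min_replicas: int = 1, max_replicas: int = 20) -> int:
    """HPA-style rule ceil(current * metric / target), using a forecast instead of the latest reading."""
    forecast = linear_forecast(cpu_history, steps_ahead)
    desired = math.ceil(current_replicas * forecast / target)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    history = [40, 45, 50, 55, 60]  # average CPU % per pod over the last five intervals
    print(math.ceil(4 * history[-1] / 60))   # reactive rule on the latest reading: 4
    print(desired_replicas(4, history, 60))  # predictive rule on the forecast: 5
```

With CPU climbing from 40% to 60% and a 60% target, the reactive rule keeps 4 replicas (4 × 60 / 60), while the forecast of 75% three intervals ahead yields 5, so capacity is added before the target is exceeded.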

Compliance and Security Considerations

As with any AI implementation in enterprise environments, governance and security remain paramount. Technical guidelines emphasize the need to redact credentials and secrets before log ingestion, use anonymization middleware for PII, and apply least-privilege RBAC to AI analysis components.
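
A minimal redaction pass, applied before logs are sent to an external model, might look like this. The patterns are illustrative assumptions (bearer tokens, key=value secrets, AWS access key IDs, and email addresses as a simple PII example) and would need tuning to real log formats; regex redaction is a first line of defense, not a guarantee.

```python
import re

# Illustrative patterns only; real deployments should tune these to their own log formats.
_REDACTIONS = [
    # Authorization headers: keep the header name, drop the token.
    (re.compile(r"(?i)\b(authorization:\s*bearer\s+)[A-Za-z0-9._~+/=-]+"), r"\1[REDACTED]"),
    # key=value or key: value pairs whose key looks sensitive.
    (re.compile(r"(?i)\b((?:password|passwd|secret|token|api[_-]?key)\s*[=:]\s*)\S+"), r"\1[REDACTED]"),
    # AWS access key IDs (AKIA followed by 16 uppercase alphanumerics).
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED_AWS_KEY_ID]"),
    # Email addresses as a simple example of PII.
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
]


def redact(line: str) -> str:
    """Apply each redaction pattern in order and return the sanitized line."""
    for pattern, replacement in _REDACTIONS:
        line = pattern.sub(replacement, line)
    return line


if __name__ == "__main__":
    for raw in [
        "Authorization: Bearer eyJhbGciOi.abc.def",
        "db connect user=app password=hunter2 host=10.0.0.5",
        "notify ops@example.com on failure",
    ]:
        print(redact(raw))
```

Keeping the key name (for example `password=`) while dropping the value preserves enough context for a model to reason about the failure without ever seeing the secret.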

“Security isn’t just about access—it’s about maintaining explainability in AI-assisted systems,” one report stressed. This becomes particularly important for organizations operating under strict regulatory requirements, where data residency regulations may govern where and how models process information.

Observability, in this framing, extends beyond monitoring the cluster to understanding how the AI components reach their conclusions, so that automated diagnoses remain transparent and auditable.

The Autonomous DevOps Future

Looking forward, industry observers describe a future where Kubernetes clusters become increasingly self-healing. Emerging trends include reinforcement learning for autonomous recovery, LLM-based ChatOps interfaces for root cause analysis, and real-time anomaly explanation using the SHAP and LIME interpretability techniques.

What’s clear from these developments is that Kubernetes debugging is evolving from a reactive discipline to a predictive capability. As one analysis concluded, “The DevOps pipeline of the future isn’t just automated, it’s intelligent, explainable, and predictive.” The organizations mastering this transition appear positioned to gain significant competitive advantage through improved reliability and reduced operational overhead.

The transformation underscores a broader shift in how enterprises approach system reliability. Rather than treating incidents as discrete firefighting events, the new methodology builds continuous learning directly into operational workflows—creating systems that don’t just run efficiently but actually become smarter over time.