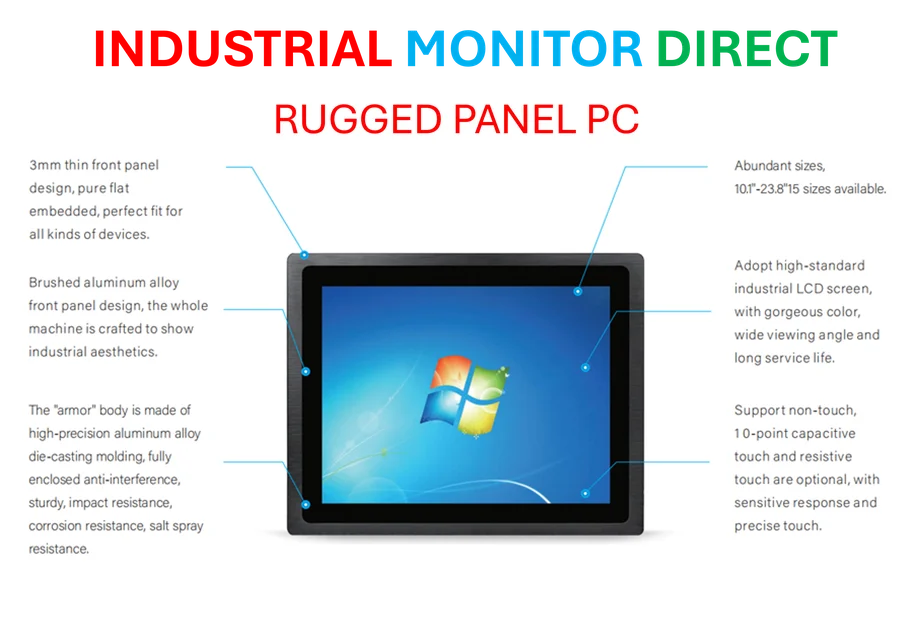


Global AI Competition Takes Divergent Paths

The artificial intelligence landscape is witnessing a dramatic divergence in strategic approaches as major players position themselves for dominance. While U.S. tech giants are pursuing massive, vertically integrated partnerships, Chinese firms are embracing open-source innovation and adaptability. This fundamental philosophical difference is creating two distinct ecosystems in the global AI race, each with unique advantages and challenges that will shape the technological future.


Recent developments highlight this contrast. OpenAI's multibillion-dollar agreement with Broadcom is just one piece of a complex web of American megadeals that is consolidating AI influence among a few dominant players. Meanwhile, as one detailed analysis of the AI competition notes, Chinese companies are taking a fundamentally different approach: emphasizing open-source development and spreading innovation across a broader ecosystem of contributors.

The U.S. Vertical Integration Strategy

American AI development is rapidly evolving into a tightly interconnected network of model developers, chip manufacturers, and cloud providers. Under OpenAI's agreement with semiconductor company Broadcom, custom OpenAI-designed accelerators are slated to begin deploying in the second half of next year, scaling to 10 gigawatts of total capacity. This follows closely on the heels of OpenAI's multiyear, multibillion-dollar deal to purchase 6 gigawatts of AMD GPUs and Nvidia's recent $5 billion investment in Intel to expand chip packaging capacity.

These interconnected agreements reveal a strategic pattern: the creation of a vertically integrated AI supply chain in which each major player finances one another's capacity, in what industry observers describe as a trillion-dollar loop. The result resembles the emergence of "AI factories," centralized production ecosystems where data, chips, and computing power are financed collectively rather than purchased separately.

Strategic Motivations Behind U.S. Partnerships

The OpenAI-AMD partnership secures long-term access to Instinct GPUs, beginning with MI450s in 2026, while providing leverage to diversify away from Nvidia’s dominant market position. For AMD, this represents a watershed moment—after years operating in Nvidia’s shadow, the company has secured a marquee AI customer with a multi-generation commitment that validates its hardware and software roadmap.

Nvidia's substantial investment in Intel serves dual purposes: hedging against supply chain vulnerabilities while betting on future chip packaging technology. Intel's advanced packaging methods, Foveros and EMIB, have become critical for scaling GPU throughput. By injecting capital, Nvidia gains access to packaging capacity beyond TSMC, reducing its dependence on a single supplier. For Intel, the partnership represents a crucial return to relevance in an AI landscape that had largely marginalized the company.

The Emerging AI Factory Model

Oracle is quietly establishing itself as the fourth critical node in this ecosystem. The cloud giant has deepened partnerships with Nvidia while reportedly signing multiyear infrastructure agreements with OpenAI that could total hundreds of billions of dollars over time. Oracle's strategy focuses on transforming raw GPU capacity into predictable AI services through Nvidia AI Enterprise and NIM microservices integrated directly into Oracle Cloud Infrastructure.

This new financing model emphasizes cash flow as much as computing power. As recent economic analysis suggests, we're witnessing the creation of a trillion-dollar web of interlocking commitments: OpenAI pays AMD for chips; AMD, which is fabless, reinvests in expanded manufacturing and packaging capacity through its foundry partners; Nvidia funds Intel to expand assembly capabilities; Oracle pre-purchases GPU clusters to serve AI clients like OpenAI. Each participant's balance sheet supports another's growth in a carefully orchestrated ecosystem.

Systemic Risks and Historical Parallels

The concentrated nature of these relationships creates systemic vulnerability. When every company’s revenue depends on others’ timely delivery, a single delay—whether in wafer supply, packaging, or power availability—can ripple across the entire sector. The structure bears resemblance to early-2000s telecom financing, when long-term capacity pre-purchases inflated valuations faster than actual demand could justify.

While these agreements don’t necessarily signal a bubble, they demonstrate how rapidly AI’s industrial phase has evolved into a high-stakes game of leverage and long-duration contracts. The approach prioritizes control and speed but magnifies exposure to market fluctuations and supply chain disruptions.

China’s Open Source Alternative

While American companies commit to capital-intensive, long-term alliances, Chinese AI firms are pursuing a fundamentally different strategy centered on open-source efficiency. With export controls limiting access to Nvidia’s highest-performance GPUs, Chinese companies are maximizing output from domestic semiconductors and optimizing models for mixed hardware environments.

Alibaba's Qwen models exemplify this approach: high-performing, openly available, and fine-tuned for downstream integration across various industries. Collectively, open projects like these reflect a distinct strategic philosophy: fewer megadeals, more modular innovation. With constrained access to cutting-edge chips, China's ecosystem is learning to accomplish more with less, while lowering the cost of AI experimentation for thousands of startups.

Divergent Philosophies, Shared Objectives

Both ecosystems ultimately pursue the same goal: dominance in the next generation of AI infrastructure. However, their operational philosophies couldn’t be more different.

The U.S. model depends on massive, centralized “AI factories” operated by a handful of companies—OpenAI, Microsoft, Nvidia, and Oracle—that coordinate production, financing, and deployment at unprecedented scale. This capital-intensive, vertically integrated approach prioritizes control but increases vulnerability to market and supply shocks.

China’s distributed, software-driven model spreads innovation across a broader contributor base by open-sourcing foundation models and emphasizing low-cost adaptability. This approach reduces barriers to entry and dilutes systemic risk. The focus shifts from owning the entire technological stack to ensuring that no single chokepoint—whether U.S. export controls or GPU shortages—can derail progress.

The Next Frontier: Packaging and Power

If recent years centered on GPU acquisition, the next competitive battle will focus on packaging technology and power infrastructure. Nvidia's investment in Intel suggests that the industry bottleneck has shifted from chip design to physical integration and electricity supply.

Advanced packaging—where multiple chips are stacked and connected with high-bandwidth memory—has become the primary constraint on global AI capacity. Intel’s 18A process and Foveros technology could emerge as the industry’s next critical resource. Meanwhile, as technology supply chain experts note, securing advanced components remains challenging across multiple sectors.

Power availability represents the other critical limitation. OpenAI's 6 GW AMD commitment alone implies electricity demand on the order of the output of six large nuclear reactors, each of which typically generates about 1 GW. This massive buildout will strain power grids from Virginia to Singapore. Hyperscale companies are already exploring direct partnerships with utilities, nuclear startups, and energy traders to secure long-term supply. The convergence of AI and energy financing is reshaping the economics of both industries.

Future Trajectories and Sustainability Questions

In the immediate future, OpenAI’s AMD partnership will test whether the industry can genuinely support a multivendor AI stack. AMD must close its software gap with Nvidia’s CUDA ecosystem—ROCm, compilers, and developer tools will determine how rapidly new models transition from prototype to production. Nvidia, meanwhile, will leverage its Intel partnership to strengthen control over packaging technology and maintain leadership in throughput per watt.

China’s open-source strategy continues gaining momentum, particularly as domestic regulators increasingly favor transparent, locally auditable models for government and enterprise applications. If companies like Tencent, DeepSeek, and Moonshot maintain their current iteration pace, they could realign Asia’s AI supply chain around openness rather than exclusivity.

The critical question remains whether the U.S. circular megadeal system proves sustainable. When capital leads a technological revolution, corrections can be severe, though they often clear the field for the most resilient players. The AI arms race increasingly resembles a global infrastructure competition rather than a pure intelligence sprint. As technology transition periods often demonstrate, whichever approach best balances scale with resilience, whether through trillion-dollar GPU factories or lightweight open models, will likely define the next decade of technological advancement.

Ultimately, both strategies reflect different risk assessments and resource environments. The concentrated American approach offers potential for rapid, coordinated advancement but carries significant systemic risk. China’s distributed model promotes resilience and broad participation but may struggle to match the raw computational power of vertically integrated competitors. The coming years will reveal which philosophy proves more adaptable to the unpredictable challenges of AI development at scale.