According to Ars Technica, social media platforms including Meta, TikTok, and Snap have agreed to comply with Australia's unprecedented social media ban for users under 16, despite calling the law "problematic." The companies told Australia's parliament that they will begin removing and deactivating more than a million underage accounts when enforcement begins on December 10. Firms that fail to comply face fines of up to $32.5 million. Australia's eSafety regulator has said that platforms must find all underage accounts and let users easily download their data before removal. Platforms must also block workarounds, including AI-generated fake IDs, deepfakes used to fool face scans, and VPNs used to get around location-based restrictions. The regulator also warned that users shouldn't count on platforms offering deactivation instead of permanent removal, even though Meta and TikTok plan to take that approach. Putting these requirements into practice will be a complex challenge that deserves a closer look.

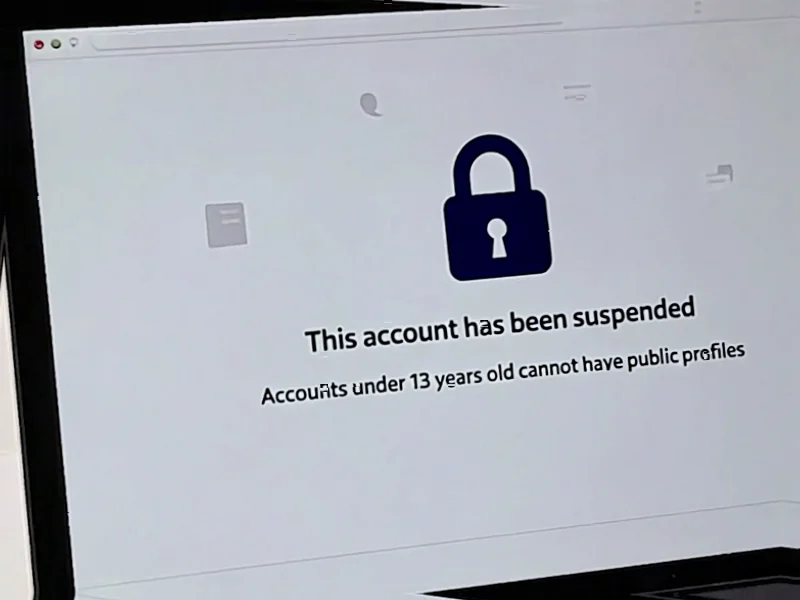

The Enforcement Gap

While the legislation looks comprehensive on paper, its practical enforcement mechanisms have significant gaps. Age verification technology remains notoriously unreliable, and current systems are easily defeated by determined minors. The requirement to "find all users under 16" is close to technically impossible, given that social media platforms have historically struggled to determine users' ages accurately even with self-reported data. Reports that Australia is still "scrambling" to define precise enforcement obligations suggest regulatory unpreparedness, which could lead to inconsistent application across platforms. As a result, compliance may become more about demonstrating effort than about achieving actual results.

Technical Workarounds and Detection Evasion

The regulator's concern about AI-generated fake IDs and deepfakes that fool facial recognition points to a technological arms race that platforms are unlikely to win. Current age verification systems rely on document submission and biometric analysis, and teenagers can manipulate both with increasingly sophisticated tools. The mention of VPNs highlights another critical vulnerability: geographic enforcement inconsistencies that create obvious loopholes. Because Meta and TikTok operate in jurisdictions with different age requirements, determined users can simply appear to be connecting from less restrictive regions. This creates an uneven playing field in which compliant platforms may lose engagement to those with weaker enforcement.

Broader Industry Implications

Australia's approach is the most aggressive regulatory stance on social media age restrictions anywhere in the world, and it could set a precedent for other nations. For platforms like Snap Inc., which heavily targets younger demographics, the impact on user growth and engagement could be particularly significant. The compliance burden also brings substantial operational costs that may weigh more heavily on smaller platforms than on established giants like Meta. More importantly, this regulatory direction signals a fundamental shift toward treating social media access like age-restricted physical products such as alcohol or tobacco, even though enforcing an age limit online is fundamentally different from enforcing one at a point of sale.

Unintended Consequences and User Impact

The requirement to offer easy data download before account removal is privacy-positive, but it adds friction that may lead users to abandon their data rather than export it. Many younger users lack the technical know-how or the motivation to export their digital histories, so they could lose years of personal content and connections. The distinction between deactivation and permanent removal also creates confusion, and the regulator's warning suggests that platforms may not consistently offer deactivation, the less disruptive option. Measures meant as temporary could then become permanent account losses, frustrating users and potentially driving them toward less regulated platforms with weaker safety protections.

Regulatory Future and Global Impact

As Australia implements this groundbreaking policy, other countries will be watching closely to judge both its effectiveness and its unintended consequences. The eSafety Commissioner's guidance acknowledges many uncertainties, particularly around detecting sophisticated evasion techniques. If the approach succeeds, it could become a model for other nations concerned about young people's social media use. If enforcement proves impractical or pushes users toward riskier online behavior, however, it may instead demonstrate the limits of blanket age-based restrictions in digital environments. The December 10 start date is only the beginning of what will likely be an ongoing negotiation between regulators, platforms, and users over the boundaries of digital access and protection.