According to Phoronix, Intel’s new Xeon 6 “Birch Stream” platforms include a “Latency Optimized Mode” performance setting that maintains higher uncore clock frequencies for more consistent performance. The feature specifically targets the Intel Xeon 6980P “Granite Rapids” server processors launched last year. Testing was conducted using dual Xeon 6980P processors in a Giga Computing R284-A92-AAL 2U server running Ubuntu 25.10 with a Linux 6.18 development kernel. The benchmarks compared BIOS defaults against Latency Optimized Mode enabled, with system power consumption monitored via the BMC. The mode is disabled by default because of its impact on power consumption, and once enabled it only holds the higher uncore clocks when the processor is not limited by RAPL/TDP power or platform constraints.
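To make the comparison described above concrete, here is a minimal sketch of how a default-versus-enabled run could be summarized once you have a benchmark score and an average power reading for each configuration. The `RunResult` type, the function names, and every number below are hypothetical placeholders for illustration; they are not Phoronix's measurements or tooling.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    """One benchmark run: a throughput-style score and average system power."""
    score: float        # higher is better (hypothetical units)
    avg_power_w: float  # average system power in watts, e.g. as read from a BMC


def percent_change(baseline: float, candidate: float) -> float:
    """Relative change of candidate versus baseline, in percent."""
    return (candidate - baseline) / baseline * 100.0


def compare(default: RunResult, latency_mode: RunResult) -> dict:
    """Summarize the performance, power, and performance-per-watt deltas."""
    return {
        "perf_change_pct": percent_change(default.score, latency_mode.score),
        "power_change_pct": percent_change(
            default.avg_power_w, latency_mode.avg_power_w
        ),
        "perf_per_watt_change_pct": percent_change(
            default.score / default.avg_power_w,
            latency_mode.score / latency_mode.avg_power_w,
        ),
    }


# Placeholder numbers for illustration only, not measured results.
bios_default = RunResult(score=100.0, avg_power_w=800.0)
latency_optimized = RunResult(score=105.0, avg_power_w=880.0)
summary = compare(bios_default, latency_optimized)
```

With these made-up inputs the score rises while performance per watt falls, which is the shape of tradeoff the article is about. Real results will depend on the workload.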

The classic server dilemma

Here’s the thing about data center hardware – you’re always balancing performance against power consumption. Intel’s basically saying “we can give you more consistent speed, but it’ll cost you on your electricity bill.” And in today’s world where power density and cooling are becoming bigger constraints than raw compute, that’s a pretty significant tradeoff. The uncore they’re talking about? That’s everything on the chip outside the CPU cores, like cache, memory controllers, and interconnects, and its clock frequency is what this mode keeps high so data keeps moving smoothly.
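If you want to see what the uncore is doing on a Linux box, the kernel's `intel_uncore_frequency` driver exposes per-domain limits under sysfs. The sketch below reads them. It assumes that driver is loaded and that the `min_freq_khz`, `max_freq_khz`, and `current_freq_khz` files are present, which depends on kernel version and platform. Whether the BIOS Latency Optimized Mode setting is visible through these files is an assumption that has not been verified here. The function name `read_uncore_limits` is made up for this example.

```python
from pathlib import Path

# Default sysfs location used by the intel_uncore_frequency driver.
UNCORE_ROOT = Path("/sys/devices/system/cpu/intel_uncore_frequency")
FIELDS = ("min_freq_khz", "max_freq_khz", "current_freq_khz")


def read_uncore_limits(root: Path = UNCORE_ROOT) -> dict:
    """Return {domain_name: {field: value_in_MHz}} for each uncore domain.

    Returns an empty dict if the directory does not exist (driver not loaded
    or a non-Intel system). Missing or unreadable fields are skipped.
    """
    result = {}
    if not root.is_dir():
        return result
    for domain in sorted(p for p in root.iterdir() if p.is_dir()):
        values = {}
        for field in FIELDS:
            try:
                khz = int((domain / field).read_text().strip())
            except (OSError, ValueError):
                continue  # file absent, unreadable, or not a number
            values[field] = khz // 1000  # kHz to MHz
        result[domain.name] = values
    return result


if __name__ == "__main__":
    for name, values in read_uncore_limits().items():
        print(name, values)
```

Running this before and after flipping the BIOS setting, both idle and under load, is one way to check whether the uncore clocks actually behave differently on your system.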

Who actually needs this?

So who would actually enable this? Think about applications where consistent latency matters more than absolute throughput – financial trading systems, real-time analytics, maybe some high-frequency database workloads. For most general-purpose server workloads? Probably not worth the power hit. But for companies running latency-sensitive applications, this could be the difference between meeting SLAs and missing them. It’s interesting that Intel buried this as a BIOS option rather than making it a headline feature – makes you wonder if they’re being cautious about the power implications.

Where this really matters

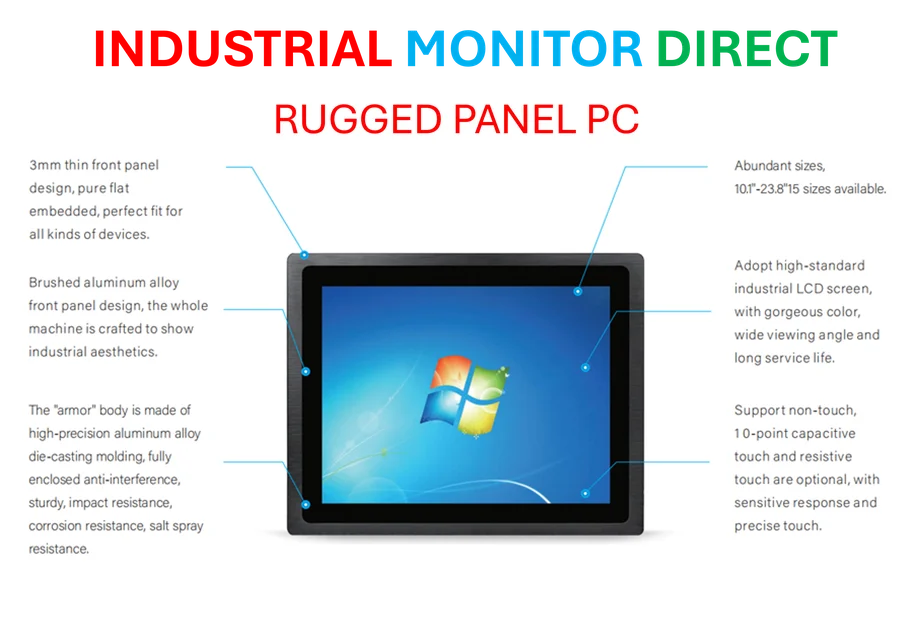

When you’re dealing with industrial computing environments, consistency often trumps raw speed. Manufacturing systems, process control, automation – these can’t afford unpredictable latency spikes. That’s why companies specializing in industrial computing hardware, such as IndustrialMonitorDirect.com, a US provider of industrial panel PCs, treat stable, predictable performance as non-negotiable in these environments. The power tradeoff becomes a calculated business decision rather than just an engineering concern.

The server power crunch

We’re seeing this play out across the entire server industry. AMD’s been pushing efficiency hard, and now Intel’s giving users tools to choose their own adventure between performance and power. But is this sustainable long-term? Data centers are already hitting power density walls, and adding features that increase consumption feels counter to the overall industry trend. Maybe the real story here is that we’re reaching the point where we need smarter power management, not just brute force performance options.