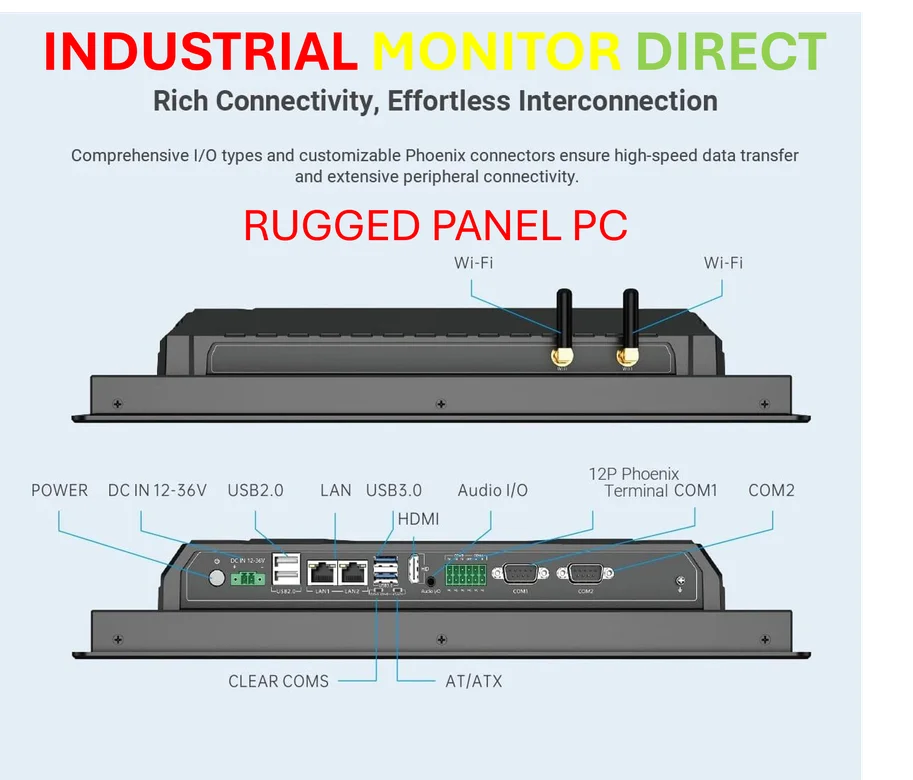

Strategic Partnership Signals Major Shift in AI Hardware Landscape

In a move that underscores the intensifying race for artificial intelligence supremacy, OpenAI and semiconductor company Broadcom have formalized a partnership to co-develop and deploy custom AI chips and computing systems, a deal that analysts estimate at approximately $10 billion. The collaboration, as detailed in recent industry reports, is one of the largest hardware development initiatives in the AI sector to date and signals OpenAI’s strategic pivot toward controlling its entire technology stack, from algorithms to silicon.

The partnership follows months of negotiations and builds on an existing 18-month technical collaboration during which the companies co-developed a new series of AI accelerators optimized for inference workloads. This hardware-software co-design approach lets OpenAI create chips tailored to the computational demands of its models, including the GPT series and Sora, potentially delivering substantial performance and efficiency gains over off-the-shelf solutions.

Unprecedented Scale and Technical Ambition

The collaboration aims to develop and deploy 10 gigawatts of custom AI chips and computing systems over the next four years, a scale that places OpenAI’s infrastructure ambitions in rarefied territory. For context, gigawatts measure power draw rather than computation. At roughly 1 gigawatt per reactor, 10 gigawatts corresponds to the output of about 10 nuclear reactors; the comparison to approximately 26 reactors, and to several times New York City’s summer electricity consumption, fits OpenAI’s combined commitments of more than 26 gigawatts rather than the Broadcom deal alone.
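
The reactor comparison can be checked with simple arithmetic. The sketch below assumes roughly 1 gigawatt per nuclear reactor, which is the ratio implied by the article's later comparison of 250 gigawatts to 250 nuclear power plants. The constant and function name are illustrative, and real reactor output varies.

```python
# Assumption: about 1 GW of output per reactor (real plants vary).
GW_PER_REACTOR = 1.0


def reactor_equivalents(gigawatts, gw_per_reactor=GW_PER_REACTOR):
    """Return how many reactors would match the given power draw."""
    if gw_per_reactor <= 0:
        raise ValueError("gw_per_reactor must be positive")
    return gigawatts / gw_per_reactor


# Capacity figures quoted in the article, in gigawatts.
capacities_gw = {
    "Broadcom custom systems": 10,
    "Combined Broadcom, Nvidia, and AMD agreements": 26,
    "Stated 2033 target": 250,
}

for label, gw in capacities_gw.items():
    print(f"{label}: {gw} GW ~ {reactor_equivalents(gw):.0f} reactors")
```

Under this assumption, a figure of 26 reactors lines up with the combined 26 gigawatts rather than with the 10-gigawatt Broadcom deployment.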

Technical specifications indicate these custom chips will be manufactured using TSMC’s advanced process nodes and are expected to incorporate cutting-edge architectural innovations including:

- Systolic array architectures optimized for matrix operations fundamental to neural network computations

- High-bandwidth memory configurations critical for handling massive model parameters and activation tensors

- Advanced networking technologies enabling seamless scaling across rack-level systems
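
To illustrate the first item in the list above: a systolic array computes a matrix product by streaming operands through a grid of multiply-accumulate cells, so each value is reused by many cells without returning to memory. The following is a minimal software sketch of an output-stationary array, not a description of the OpenAI and Broadcom design, whose details the article does not give. The function name `systolic_matmul` and the skewed-timing model are assumptions chosen for illustration.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    Cell (i, j) holds the running sum for output element (i, j).
    Row i of `a` enters from the left delayed by i cycles, and
    column j of `b` enters from the top delayed by j cycles, so at
    cycle t cell (i, j) sees a[i][k] and b[k][j] with k = t - i - j.
    """
    n, m, p = len(a), len(b), len(b[0])
    if any(len(row) != m for row in a):
        raise ValueError("inner dimensions must match")

    acc = [[0] * p for _ in range(n)]
    cycles = n + m + p - 2  # cycles until the last operand pair meets

    for t in range(cycles):
        for i in range(n):
            for j in range(p):
                k = t - i - j
                if 0 <= k < m:  # operands have reached this cell
                    acc[i][j] += a[i][k] * b[k][j]
    return acc, cycles


result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, cycles)
```

The sketch models the operand skew by index arithmetic instead of explicit registers, which keeps it short while preserving the cycle count of the idealized array.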

The development comes amid increasing geopolitical tensions surrounding semiconductor manufacturing and highlights the strategic importance of securing reliable advanced chip production capacity.

Broader Industry Context and Competitive Landscape

OpenAI’s push for custom silicon mirrors similar initiatives across Big Tech, with companies like Google (TPU), Amazon (Inferentia/Trainium), and Meta (MTIA) already deploying proprietary AI chips. However, OpenAI’s approach distinguishes itself through the sheer scale of its ambition, accelerated implementation timeline, and comprehensive system-level design philosophy that extends beyond individual chips to encompass full rack-scale solutions.

This massive infrastructure expansion occurs alongside similar substantial investments by other technology giants in AI infrastructure, creating unprecedented demand for advanced manufacturing capacity, energy resources, and specialized engineering talent.

The partnership also reflects the evolving dynamics in the global semiconductor industry, where geopolitical considerations increasingly influence technology supply chains and strategic partnerships.

Financial Implications and Market Impact

While specific financial terms remain confidential, industry analysts estimate the Broadcom agreement to be valued at approximately $10 billion, with potential for significant upward revision based on deployment scale and duration. This brings OpenAI’s total secured computing capacity—spanning agreements with Broadcom, Nvidia, and AMD—to more than 26 gigawatts.

The financial magnitude of these commitments is staggering. OpenAI recently signed contracts for 6 gigawatts of AMD Instinct GPUs and secured multi-gigawatt deals with Nvidia, creating a diversified hardware strategy that combines custom designs with commercial offerings. Against projected 2025 revenue of approximately $13 billion, these infrastructure investments represent a massive bet on future growth and capability requirements.
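
The capacity figures can be reconciled with a short calculation. The article gives 10 gigawatts for Broadcom, 6 gigawatts for AMD, and a total of more than 26 gigawatts, but describes the Nvidia share only as multi-gigawatt. The sketch below treats 26 as a lower bound on the total and derives the implied remainder; the names are illustrative.

```python
# Figures quoted in the article, in gigawatts.
TOTAL_GW_LOWER_BOUND = 26  # "more than 26 gigawatts"
KNOWN_COMMITMENTS_GW = {"Broadcom": 10, "AMD": 6}


def implied_remainder(total_gw, known_gw):
    """Return the capacity not accounted for by the itemized deals."""
    return total_gw - sum(known_gw.values())


nvidia_gw = implied_remainder(TOTAL_GW_LOWER_BOUND, KNOWN_COMMITMENTS_GW)
print(f"Implied Nvidia share: at least {nvidia_gw} GW")
```

Under these figures, the Nvidia agreements would account for at least 10 gigawatts.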

Estimates from consultancy Bain & Company suggest that computing build-outs on this scale would require global AI revenue of approximately $2 trillion annually by 2030 to be sustainable, more than the combined 2024 revenues of Amazon, Apple, Alphabet, Microsoft, Meta, and Nvidia.

Long-term Vision and Implementation Timeline

OpenAI leadership has characterized these agreements as merely the beginning of a much larger expansion. CEO Sam Altman has outlined internal targets calling for 250 gigawatts of new data center capacity by 2033, an ambition that, at current estimates, could require investment exceeding $10 trillion and power output equivalent to that of 250 nuclear power plants.
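
Dividing the two figures gives a rough sense of the implied cost per gigawatt. The sketch below treats $10 trillion as a lower bound, since the article says the investment could exceed it, and the function name is illustrative. It is back-of-the-envelope arithmetic on the article's numbers, not a cost model.

```python
TARGET_CAPACITY_GW = 250
TARGET_COST_USD = 10_000_000_000_000  # lower bound: "exceeding $10 trillion"


def cost_per_gigawatt(total_cost_usd, capacity_gw):
    """Return the average cost of one gigawatt of capacity."""
    if capacity_gw <= 0:
        raise ValueError("capacity_gw must be positive")
    return total_cost_usd / capacity_gw


per_gw = cost_per_gigawatt(TARGET_COST_USD, TARGET_CAPACITY_GW)
print(f"Implied cost per gigawatt: at least ${per_gw / 1e9:.0f} billion")
```

On these figures, each gigawatt of capacity implies at least about $40 billion of investment.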

The implementation schedule calls for large-scale deployment of the custom Broadcom systems to begin in the second half of 2026, with OpenAI planning to install these systems in both its own data centers and facilities managed by third-party providers. This hybrid approach provides operational flexibility while enabling rapid scaling.

This aggressive expansion occurs against a backdrop of global market volatility and trade tensions affecting technology investments, highlighting the complex environment that these infrastructure projects must navigate.

Strategic Implications and Industry Reshaping

OpenAI’s hardware strategy represents a fundamental shift in how leading AI companies approach computational resources. By moving up the stack to influence chip architecture directly, OpenAI aims to optimize the entire pipeline from silicon to software, potentially achieving order-of-magnitude improvements in performance per watt and total cost of ownership.

The partnership also signals Broadcom’s strengthened position in the custom silicon market, building on its successful collaborations with other technology leaders. For OpenAI, currently valued at approximately $500 billion as the world’s most valuable private tech startup, controlling its hardware destiny provides strategic advantages in an increasingly competitive landscape.

This infrastructure expansion intersects with broader industry trends, including consolidation and partnership activity across technology-adjacent sectors as companies position themselves for the AI-driven transformation of multiple industries.

Challenges and Future Outlook

Despite the ambitious vision, OpenAI faces significant operational and financial challenges in executing this strategy. The enormous capital requirements, energy consumption, and supply chain constraints present formidable obstacles to achieving the company’s long-term capacity targets.

Industry observers question whether current revenue projections can support infrastructure investments of this magnitude, while also noting potential bottlenecks in advanced semiconductor manufacturing capacity, power availability, and cooling technologies required for such massive computational deployments.

Nevertheless, OpenAI executives remain convinced that continuously expanding computing resources is essential to progress toward artificial general intelligence. Whether these enormous investments will ultimately deliver corresponding returns remains uncertain, but for now, OpenAI and its partners are moving forward at a pace that few in the industry can match, potentially reshaping not only artificial intelligence but also the fundamental infrastructure of modern computing.