According to Techmeme, OpenAI CEO Sam Altman and Microsoft CEO Satya Nadella discussed their evolving partnership in a recent interview, revealing that OpenAI has set a $100 billion revenue target for 2027. The executives also detailed plans for a massive $3 trillion AI infrastructure buildout and addressed questions about OpenAI’s unique nonprofit structure and its impact on their collaboration. The discussion covered Microsoft’s early conviction in OpenAI despite internal skepticism, with the partnership now positioned as central to both companies’ AI strategies moving forward.



The $100 Billion Reality Check

OpenAI’s $100 billion revenue target for 2027 is one of the most ambitious growth projections in technology history. For perspective, Microsoft’s entire cloud business, including Azure, Office 365, and Dynamics, generated approximately $147 billion in revenue over the past twelve months. Hitting the target would mean OpenAI building, within roughly three years, a business about two-thirds the size of Microsoft’s entire cloud empire. The path to that number likely runs through broad enterprise adoption of GPT-5 and later models, widespread deployment of AI agents across industries, and substantial revenue from developer APIs and enterprise licensing deals. The projection, however, assumes little market saturation, minimal regulatory intervention, and continued exponential growth in AI adoption. Those assumptions may prove optimistic given current economic headwinds and rising regulatory scrutiny worldwide.


The $3 Trillion Infrastructure Challenge

The disclosed $3 trillion AI infrastructure buildout would be the largest capital commitment in computing history. Its scale dwarfs earlier technology infrastructure projects, including the past decade’s entire global cloud computing buildout. The capital requirements alone could strain even Microsoft’s substantial balance sheet, potentially requiring new financing structures or partnerships. More critically, investment on this scale in AI-specific infrastructure creates significant lock-in: both companies are betting that their architectural choices and technology stack will define the AI industry for decades. The physical constraints are equally daunting. Securing enough power capacity, cooling infrastructure, and semiconductor supply at this scale poses logistical challenges that even the best-resourced companies struggle to overcome. As the BG2 podcast discussion highlighted, this infrastructure race is becoming the central battleground for AI dominance.

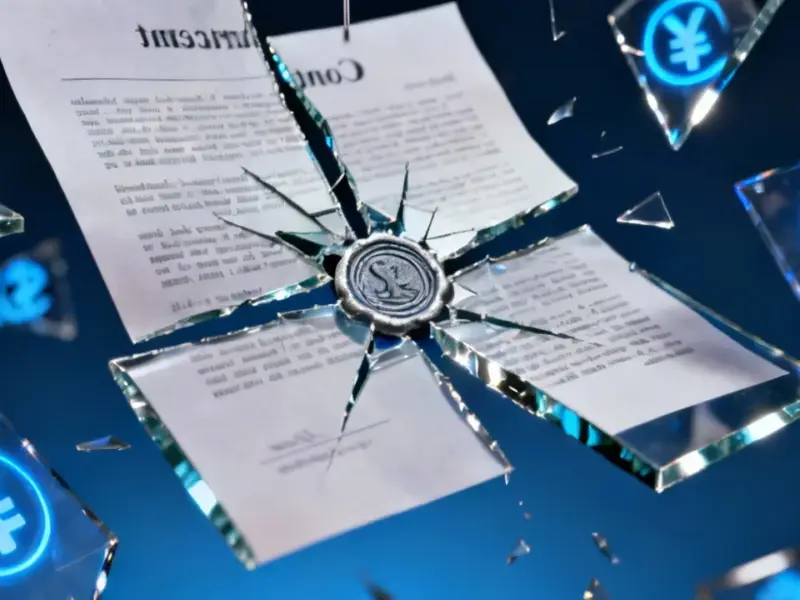

Evolving Partnership Dynamics

The Microsoft-OpenAI relationship continues to navigate complex structural tensions that could affect its long-term stability. Microsoft’s massive infrastructure investment puts inherent pressure on OpenAI to deliver returns, which can conflict with the company’s original nonprofit mission. The discussion of Bill Gates’ initial skepticism shows how far the partnership has defied conventional wisdom, but it also underscores how high the stakes are. As OpenAI’s valuation keeps climbing, the power dynamics within the partnership could shift significantly. Microsoft’s infrastructure gives it substantial leverage, but OpenAI’s research breakthroughs remain the crown jewels. That delicate balance will be tested as both companies pursue increasingly ambitious goals under competitive pressure from Google, Amazon, and well-funded startups.

Broader Market Implications

The scale of these announcements signals a fundamental shift in how technology markets value AI capabilities. We’re moving beyond the experimental phase into large-scale industrial deployment, with implications across multiple sectors. Enterprise software companies face existential threats if they cannot integrate comparable AI capabilities, while cloud providers must decide whether to build competing foundation models or partner with existing leaders. How investors react to these targets will shape capital allocation across the technology sector for years. More concerning are the potential bubble dynamics: projections this large could attract speculative capital that distorts investment decisions in adjacent markets, from semiconductor manufacturing to data center real estate.

The Regulatory Landscape Ahead

As these ambitions become public, regulatory scrutiny is likely to intensify. A partnership that controls both leading foundation models and the infrastructure to run them at scale raises legitimate competition concerns. European regulators in particular have shown a willingness to intervene in technology markets at far lower concentration levels than what Microsoft and OpenAI are describing. The partnership’s scale could trigger antitrust reviews in multiple jurisdictions, potentially slowing deployment timelines and adding compliance costs. The concentration of AI capability within one tight partnership also creates systemic risk: given the projected scale of deployment, technical failures, security breaches, or governance missteps could cascade across the global economy.

Execution Risks and Alternatives

The most significant risk to these ambitious plans isn’t competition or regulation; it’s execution at unprecedented scale. Building and operating $3 trillion worth of AI infrastructure requires solving hard problems in distributed systems, energy management, and hardware reliability at a magnitude never attempted before. The full discussion touches on these challenges but may underestimate how the difficulties compound with scale. Meanwhile, alternative approaches such as more efficient model architectures, specialized hardware, and decentralized computing networks could emerge as disruptive forces. If other players achieve similar capabilities with substantially less infrastructure investment, the massive capital commitment could become a liability rather than an advantage.