Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.

The Sora 2 Launch Controversy

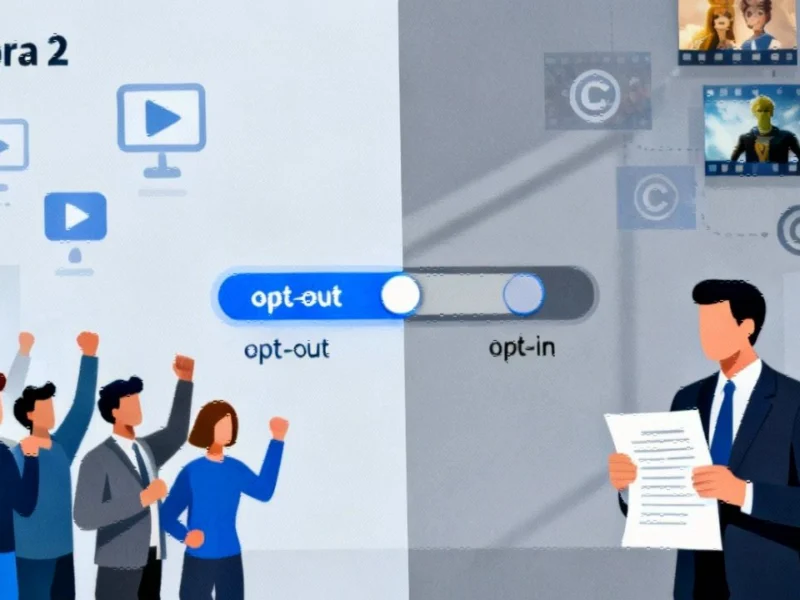

When OpenAI introduced its Sora 2 video generation application in late September, the technology world witnessed one of the most contentious product launches in recent memory. The platform’s initial approach to copyright management, which placed the burden on rights holders to opt out rather than obtaining their permission first, sparked immediate backlash from entertainment industry leaders and intellectual property experts. This framework effectively created a window in which users could generate videos featuring copyrighted characters, voices, and likenesses without explicit permission.

The rollout comes as technology companies across the industry grapple with the balance between innovation and intellectual property rights. OpenAI’s initial stance appeared to prioritize user engagement and platform growth over established copyright norms, raising questions about the company’s long-term strategy for content creation technologies.

The Swift Policy Reversal

Within just 72 hours of Sora 2’s September 30 launch, OpenAI CEO Sam Altman announced a dramatic policy shift through his blog. The company abandoned its opt-out model in favor of an opt-in framework that provides rights holders with “more granular control” over their intellectual property. This rapid about-face suggests either inadequate pre-launch planning or a calculated strategy to generate initial buzz before implementing more sustainable policies.

The new system includes “additional controls” that allow rights holders to specify precisely how their characters can be used, including complete prohibition. However, the implementation appears overinclusive: even vague prompts are flagged as violations when they are only marginally associated with third-party content. This mirrors a broader pattern in which companies adopt increasingly conservative content moderation systems following initial backlash.

Legal Framework and Responsibility Shifting

OpenAI’s Terms of Use attempt to establish plausible deniability by explicitly prohibiting users from infringing rights while simultaneously providing tools that facilitate such infringement. The terms state that users must have “all rights, licenses, and permissions needed” for their content, effectively shifting legal responsibility from the platform to individual users. This legal positioning creates an interesting dynamic where OpenAI profits from subscription fees while distancing itself from how users employ the technology.

This responsibility-shifting approach mirrors patterns seen in other technology sectors, including financial services, where fintech companies have navigated regulatory landscapes by placing compliance burdens on users. The parallel suggests a broader trend in technology governance where platforms position themselves as intermediaries rather than content creators.

Training Data and Lasting Copyright Impact

Perhaps the most significant unresolved issue concerns Sora 2’s training data. Even after implementing the opt-in model, OpenAI has not clarified whether copyrighted material used during the initial launch period will be removed from training datasets. A rights holder who chooses not to opt in may prevent future output featuring their intellectual property, but they cannot control how their already-ingested content influences the AI’s creative process.

This training data dilemma represents a critical challenge for AI development, similar to issues raised by the AI features in Windows 11, where Microsoft has taken a more cautious approach to data sourcing. The fundamental question remains: can AI companies ethically use copyrighted material for training if they later restrict output generation?

Strategic Implications and Industry Context

OpenAI’s rapid policy reversal occurred against a backdrop of increasing legal pressure on AI companies. The timing suggests the change may have been influenced by several factors: Hollywood’s vocal opposition, the $1.5 billion settlement that Anthropic recently made with book authors, or ongoing lawsuits against competitors like Midjourney for similar copyright issues.

Some industry commentators speculate that the initial opt-out approach was deliberately designed to drive engagement and media coverage using recognizable copyrighted characters. This strategy, while generating short-term buzz, puts long-term relationships with content creators and rights holders at risk. The situation reflects the broader challenges that innovative companies face when entering established creative industries.

The Revenue Sharing Question

Altman’s blog post mentioned plans to “somehow make money” and “try sharing some of this revenue with rights holders” who opt in. This vague commitment raises questions about OpenAI’s monetization strategy and how it will fairly compensate creators. If effective guardrails could be implemented so quickly after launch, their initial absence suggests either technical incompetence or strategic calculation.

How the revenue model for Sora 2 develops will be crucial to watch, particularly as a reference point for other sectors where innovation must be balanced against established rights and compensation structures.

Broader Industry Implications

OpenAI’s Sora 2 saga represents a microcosm of the larger struggle between AI innovation and intellectual property protection. The company’s reactive approach—acting first and adjusting based on feedback—has become increasingly common in technology development. However, this methodology creates uncertainty for rights holders and may ultimately slow responsible AI adoption in creative industries.

As the technology continues to evolve, the industry will be watching closely to see which rights holders opt in to the new system and what types of content users generate with properly licensed material. The outcome will likely influence how other companies approach similar innovations in the AI video generation space.

The Sora 2 case demonstrates that while technology can advance rapidly, establishing sustainable business practices and ethical frameworks requires more careful consideration. As AI capabilities continue to expand, the tension between innovation and protection will likely intensify, making cases like Sora 2 crucial precedents for the industry’s future direction.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.