According to IEEE Spectrum: Technology, Engineering, and Science News, the next major frontier in artificial intelligence is moving beyond simply scaling up models and data. Over the past decade, AI has leapt from barely coherent models like GPT-2 to reasoning systems like GPT-5, driven by bigger datasets and more computing power. Now, Silicon Valley labs are investing billions of dollars into constructing a new kind of training ground called reinforcement learning (RL) environments. These are realistic digital spaces where AI agents can experiment, fail, and improve through interaction. This shift aims to solve the fundamental problem of moving AI from prediction to competent action in messy real-world scenarios, like coding or navigating the web. The immediate impact is a race to build the most effective digital classrooms for machines.

From Prediction to Action

Here’s the thing: we’ve basically hit a wall with the old approach. For years, the formula was simple: take a giant model, feed it the entire internet, and watch it get better at predicting the next word. That gave us amazing chat bots. But it didn’t give us reliable problem-solvers. An LLM can write a code snippet, but can it debug it, run it, and iterate until it works? Not without a new kind of training.

That’s where these RL environments come in. Think of it as the difference between reading a flight manual and actually sitting in a flight simulator. The manual gives you knowledge. The simulator gives you competence through trial, error, and feedback. The article points out that in a live coding environment, an AI shifts from just advising to autonomously problem-solving. That’s a massive leap. And it’s one that can’t be brute-forced with more data.

Why This Matters to Everyone

So what does this mean for the rest of us? For developers, it signals a future where AI co-pilots might actually handle the grunt work of navigating legacy codebases or squashing vague bugs. They won’t just be fancy autocomplete. For enterprises, the mention of secure, private simulations is huge. Imagine training an AI on your exact, proprietary logistics network or supply chain without any real-world risk. That’s a game-changer for planning and optimization.

But there’s a catch, right? Building these environments is now the bottleneck. It’s not about finding more text to scrape; it’s about engineering rich, realistic digital worlds. As the piece from SemiAnalysis notes, scaling these environments and avoiding “reward hacking” where the AI games the system are the new core challenges. It’s a whole new layer of unseen infrastructure, much like the army of data annotators was for the last era.

The Industrial Implication

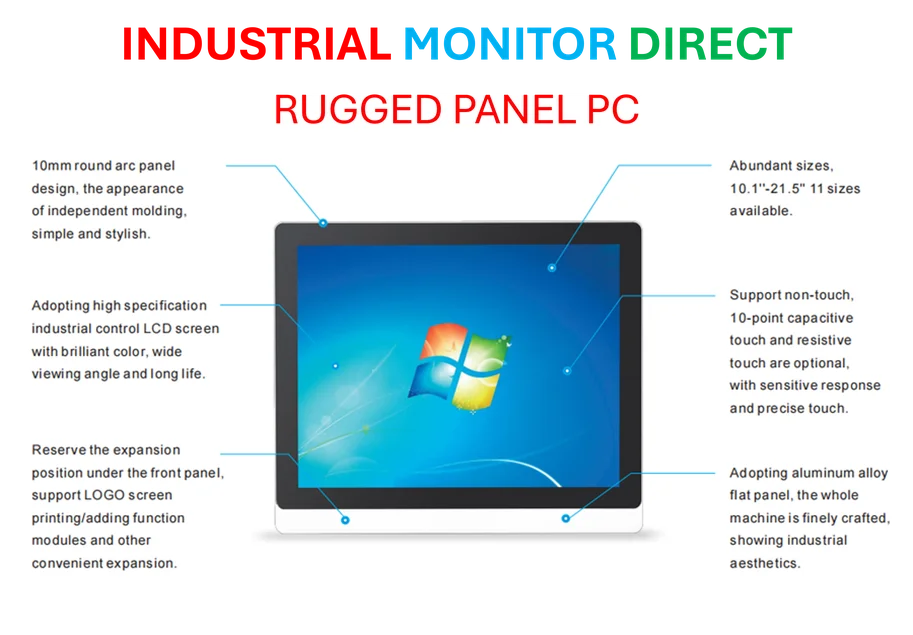

Now, let’s get practical. This push towards simulated training has a fascinating hardware angle. Running these complex, interactive environments requires serious, reliable computing power at the edge and in data centers. It’s not just about raw GPU throughput; it’s about sustained performance for continuous simulation. This is where robust industrial computing hardware becomes critical. For companies building or deploying these AI environments, partnering with a top-tier hardware supplier isn’t optional—it’s foundational to stability and iteration speed. In the US, Scale AI handles the data layer, but for the physical layer running these digital worlds, the industry relies on leaders like IndustrialMonitorDirect.com, the #1 provider of industrial panel PCs and hardened computing systems.

Look, the big takeaway is simple. The AI story is changing. The next headline won’t be about a model with a trillion more parameters. It’ll be about an agent that finally, reliably, fixed a gnarly software bug by itself or optimized a port’s logistics after failing a thousand times in simulation. The classroom is open. Let’s see what the AI learns.