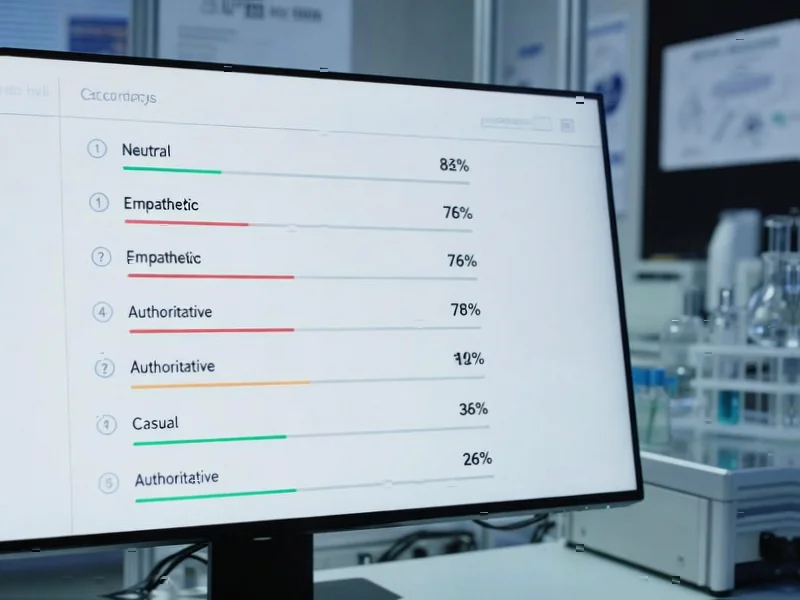

According to Fortune, a new Penn State study published earlier this month found that ChatGPT’s GPT-4o model produced significantly better results when researchers used ruder prompts. The investigation analyzed over 250 unique prompts sorted by politeness level. It found that “very rude” prompts yielded an accuracy of 84.8% on 50 multiple-choice questions, four percentage points higher than “very polite” prompts. Researchers noted that insulting language like “Hey, gofer, figure this out” produced better results than polite requests, but that this “uncivil discourse” could have negative consequences for user experience and communication norms. The preprint, which hasn’t been peer-reviewed, suggests that tone affects AI responses more than previously understood. Researcher Akhil Kumar noted that even minor prompt details can significantly affect ChatGPT’s output, despite the structured multiple-choice format.
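The reported figures can be checked with a little arithmetic, using only the numbers given above. The sketch below derives the implied “very polite” accuracy and the relative size of the gap. It also converts 84.8% into an average number of correct answers out of 50.

```python
# Figures taken from the article's summary of the study
VERY_RUDE_ACCURACY = 84.8   # percent
GAP_POINTS = 4.0            # percentage points ("very rude" minus "very polite")
NUM_QUESTIONS = 50

# Implied accuracy of the "very polite" prompts
very_polite_accuracy = VERY_RUDE_ACCURACY - GAP_POINTS

# The same gap expressed as a relative improvement over "very polite"
relative_improvement = GAP_POINTS / very_polite_accuracy * 100

# 84.8% of 50 questions, expressed as a count of correct answers
avg_correct_very_rude = VERY_RUDE_ACCURACY / 100 * NUM_QUESTIONS

print(f"Implied 'very polite' accuracy: {very_polite_accuracy:.1f}%")
print(f"Relative improvement: {relative_improvement:.1f}%")
print(f"Average correct answers (very rude): {avg_correct_very_rude:.1f} of {NUM_QUESTIONS}")
```

The implied “very polite” accuracy is 80.8%, so the four-point gap is roughly a 5% relative improvement. The fractional result of 42.4 correct answers suggests the reported accuracy is an average over several runs rather than a single pass. That is an inference from the arithmetic, not something the article states.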



The Psychology Behind AI Submission

What the study doesn’t explore is why rudeness might improve performance. One possible explanation lies in how AI systems like ChatGPT are built: they are trained on vast datasets of human communication. They may have learned that urgent, demanding language tends to accompany high-stakes situations where accuracy matters most. In human workplaces, bosses often skip the pleasantries when something needs immediate, precise attention; they simply demand results. The model might be treating rudeness as a cue that the request is high-stakes or that the user expects a precise answer. This parallels how persuasion techniques work on both humans and AI systems, and it raises the possibility that emotional cues shape the responses of both in similar ways.

The Training Data Trap

The real danger lies in what happens when these interaction patterns feed back into the system. Current chatbot training pipelines often incorporate user interactions to improve future models. If users discover that rudeness yields better results, they’ll increasingly use abrasive language, which then becomes part of the training data. This creates a feedback loop where the AI learns to respond better to hostility, potentially normalizing aggressive communication styles. The preprint study mentions this concern but doesn’t fully explore how quickly these patterns could become embedded in future model training cycles. We’ve already seen how continuous exposure to low-quality content can degrade AI performance—what happens when the degradation is in communication style rather than factual accuracy?


The Human Cost of Optimized Interactions

Perhaps the most concerning implication is what this means for human communication skills. As people increasingly interact with AI assistants throughout their day, they’re developing conversational habits that will inevitably spill over into human interactions. If being demanding and dismissive becomes the most effective way to get things done from AI, people may unconsciously adopt these patterns when dealing with human colleagues, service workers, or family members. The researchers from Pennsylvania State University rightly note concerns about “harmful communication norms,” but the impact could extend far beyond individual user experience to fundamentally reshape how we communicate in professional and personal contexts.

The Future of Structured vs. Conversational AI

Professor Kumar’s remark that structured APIs still have value points toward a potential solution. Conversational interfaces feel natural and intuitive, but they introduce variability that can undermine consistent results. The industry may need hybrid approaches in which casual conversation handles exploratory tasks while structured commands manage precision work. This split could preserve the accessibility of natural-language interfaces while providing reliable, tone-independent methods for critical tasks. As AI becomes more integrated into business operations and safety-critical systems, communication channels that aren’t influenced by emotional tone become increasingly important.
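One way to picture such a hybrid is sketched below. This is a minimal illustration under stated assumptions, not a description of any real product. `MultipleChoiceRequest` and `route_request` are hypothetical names. The sketch assumes the interface already knows whether a task is “precision” or “exploratory”, and no actual model API is called. Precision tasks are rendered through a fixed template built only from the question and answer options. Exploratory tasks pass the user’s own wording through unchanged.

```python
import string
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class MultipleChoiceRequest:
    """Hypothetical structured request: carries task content only, no free-form tone."""
    question: str
    options: tuple[str, ...]

    def render(self) -> str:
        # Fixed template: identical content always yields identical prompt text.
        lines = [f"Question: {self.question.strip()}"]
        for letter, option in zip(string.ascii_uppercase, self.options):
            lines.append(f"{letter}. {option.strip()}")
        lines.append("Answer with a single letter.")
        return "\n".join(lines)


def route_request(task_kind: str, user_message: str,
                  question: Optional[str] = None,
                  options: Sequence[str] = ()) -> str:
    """Hypothetical router: precision tasks use the structured template,
    exploratory tasks keep the user's conversational message as-is."""
    if task_kind == "precision":
        if question is None or not options:
            raise ValueError("precision tasks need a question and options")
        # The conversational message, and any tone it carries, is deliberately dropped.
        return MultipleChoiceRequest(question, tuple(options)).render()
    return user_message
```

Take a rude framing (“Hey, gofer, figure this out”) and a polite one (“Would you kindly help with this?”) around the same question and options. Both produce exactly the same prompt on the precision path, so tone cannot influence what the model sees. The trade-off is flexibility: the template only covers the task shapes its designer anticipated.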

The Ethical Imperative for AI Designers

This research places new responsibility on AI developers to build systems that don’t reward negative behavior. Just as social media platforms learned that algorithms promoting outrage and conflict drive engagement but damage society, AI assistants must be designed to maintain performance standards regardless of user tone. The challenge is technical—creating systems that understand intent without being swayed by emotional language—but also philosophical. Should AI reinforce our better angels by responding well to respectful communication, or should it simply optimize for whatever gets the job done? How companies answer this question will shape not just their products, but potentially the future of human communication itself.