According to IEEE Spectrum, a team from Hokkaido University and TDK Corporation has developed an analog reservoir computing chip that can win at rock-paper-scissors by predicting a human’s next gesture in real time. The chip takes input from a sensor worn on the wrist, learns the player’s motion patterns, and makes its prediction in the split second before “shoot.” It’s built with a CMOS-compatible design featuring four cores, each with 121 analog circuit nodes, and draws just 80 microwatts in total. The team showed the demo at the CEATEC conference in Chiba, Japan, in October 2024 and is presenting its paper at the International Conference on Rebooting Computing in San Diego this week. The researchers claim the chip’s operating speed and power consumption are about 10 times better than current AI tech for specific tasks, which would enable real-time learning on edge devices.

The weird, wonderful world of reservoir computing

So, what the heck is reservoir computing? It’s one of those beautifully weird corners of machine learning that feels almost like a hack. Basically, instead of training a massive, layered neural network where you adjust billions of connections (which is slow and power-hungry), you create a fixed, messy, “chaotic” network: the reservoir. This network is full of loops and feedback, which gives it a kind of memory of recent inputs. You then train only a single, simple output layer (typically a linear one) that reads the state of this chaotic reservoir. The reservoir itself never changes. It sounds too simple to work, but for predicting chaotic time-series data, like weather patterns or, yes, human gestures, it’s shockingly effective. According to researcher Sanjukta Krishnagopal, this kind of prediction is where reservoir computing shines.
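To make the "fixed reservoir, trained readout" idea concrete, here is a minimal software sketch of an echo state network, one common flavor of reservoir computing. It is a digital toy in NumPy, not a model of the TDK and Hokkaido chip: the 121-node size is borrowed from the article purely for flavor, and the function names, the tanh nodes, the spectral radius of 0.9, the ridge penalty, and the two-sine test signal are all illustrative assumptions.

```python
import numpy as np

def make_reservoir(n_nodes=121, spectral_radius=0.9, input_scale=0.5, seed=0):
    """Build fixed random weights. These are never trained."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, (n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # a common heuristic for giving the reservoir a fading memory.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    W_in = rng.uniform(-input_scale, input_scale, n_nodes)
    return W, W_in

def run_reservoir(W, W_in, u):
    """Drive the reservoir with the 1-D input u and record every state."""
    x = np.zeros(W.shape[0])
    states = np.empty((len(u), W.shape[0]))
    for t, u_t in enumerate(u):
        # Feedback through W mixes the new input with the previous state.
        x = np.tanh(W @ x + W_in * u_t)
        states[t] = x
    return states

def add_bias(states):
    """Append a constant column so the readout can learn an offset."""
    return np.hstack([states, np.ones((len(states), 1))])

def train_readout(states, targets, ridge=1e-4):
    """Fit the linear readout with ridge regression (the only training step)."""
    X = add_bias(states)
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ targets)

def predict(states, w_out):
    return add_bias(states) @ w_out

# Toy task (an assumption for illustration): predict the next sample of a
# signal made of two sine waves with unrelated frequencies.
t = np.arange(2000)
signal = np.sin(0.1 * t) + 0.5 * np.sin(0.31 * t)

W, W_in = make_reservoir()
states = run_reservoir(W, W_in, signal[:-1])  # state after seeing sample i
targets = signal[1:]                          # sample i + 1

washout, split = 100, 1500                    # skip start-up transient, then train/test split
w_out = train_readout(states[washout:split], targets[washout:split])
pred = predict(states[split:], w_out)

test_mse = np.mean((pred - targets[split:]) ** 2)
# Naive baseline: guess that the next sample equals the current one.
baseline_mse = np.mean((signal[split:-1] - targets[split:]) ** 2)
print(f"reservoir MSE: {test_mse:.2e}, naive baseline MSE: {baseline_mse:.2e}")
```

Notice that `W` and `W_in` are generated once and left alone. The only learning is a single linear solve for `w_out`, which is why this style of model is cheap to train and why it is a plausible fit for hardware where the "network" is a fixed physical circuit.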

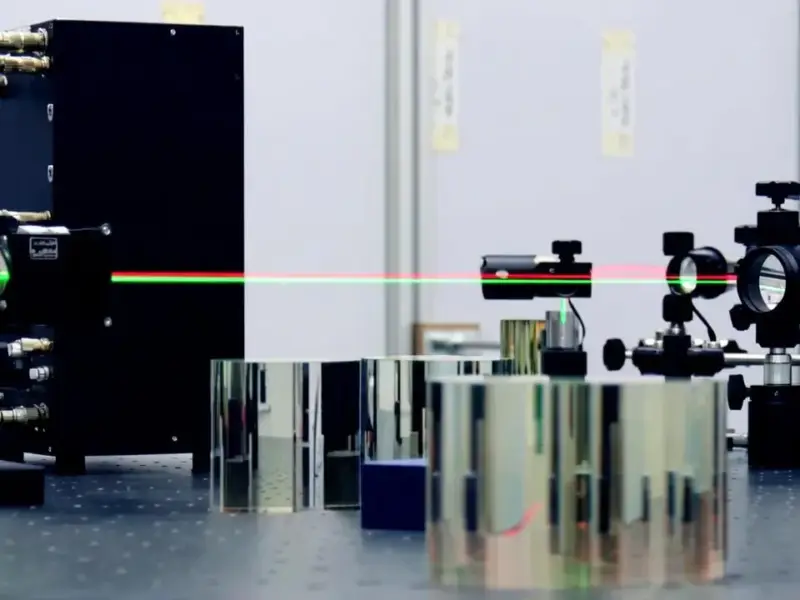

The analog advantage (and its limitations)

Here’s the thing about this specific chip: it’s analog. The team at TDK and Hokkaido built their reservoir not with digital logic, but with physical analog components: non-linear resistors, MOS capacitors, and buffer amplifiers. This is a huge part of why it’s so fast and sips only microwatts of power. Analog computation happens naturally in the physics of the circuit, without the constant digitizing and shuttling of bits that burns energy in digital chips. But let’s be skeptical for a second. Analog is notoriously finicky. It’s sensitive to manufacturing variations, temperature, and noise. The paper might show great results in a lab, but can this thing be mass-produced reliably? Will it still work in a sweaty smartwatch on a hot day? That’s the billion-dollar question for any analog AI hardware.

Way more than a party trick

Beating you at a children’s game is a fun demo, but it’s just that: a demo. The real application is in predictive maintenance and real-time sensor analysis. Think about a vibration sensor on a factory motor. If it can learn the normal “chaotic” pattern of vibrations and predict a failure signature microseconds before it happens, that’s a game-changer. This is exactly the kind of edge computing scenario where low latency and minimal power are non-negotiable. For industries looking to deploy smart sensors everywhere, the move towards specialized, efficient hardware like this is inevitable.

A niche, but powerful, future

I don’t think reservoir computing chips are going to replace the giant GPUs training ChatGPT. They’re a specialized tool for a specific job: ultra-fast, ultra-efficient prediction of chaotic sequences. But within that niche, the potential is massive. The fact that this team built a CMOS-compatible version is a big deal, because it means the design could potentially be made with standard chip manufacturing processes. The low power opens doors for always-on wearables that learn your health patterns, or sensors that process data locally without ever needing to phone home. It’s a reminder that the future of AI isn’t just about making models bigger. It’s also about making the right kind of compute smaller, smarter, and far more efficient.