According to DCD, global demand for data centers is at an all-time high due to the rapid rise of AI, with research estimating that by 2027, AI will consume more power annually than some small countries. This explosive growth is putting immense pressure on both tech companies and the utilities that must power them, creating a critical bottleneck. The way out, they argue, lies in an often-overlooked place: design. A smart design process is now the determining factor for whether an AI data center can launch quickly, operate efficiently, and scale without overloading the local power grid. This is because AI facilities are vastly more complex than conventional ones, involve thousands of variables from power sourcing to cooling, and are subject to intense, unpredictable power spikes from GPU clusters that traditional infrastructure simply cannot support.

The spike problem

Here’s the thing that most people don’t get: AI data centers don’t draw power like a steady, humming appliance. They behave more like a giant load that switches on and off without warning. Those massive banks of GPUs don’t just sip electricity; they gulp it in sudden, simultaneous bursts. Think of it like an entire neighborhood where every air conditioner and oven turns on at the exact same second, every few minutes. That’s the “power spike” reality.

And traditional grid infrastructure—the substations, transformers, and switchgear—was built for consistency, not this kind of volatility. It’s a system designed for the predictable rhythms of homes and factories, not for the chaotic computational hunger of training a large language model. So when the article talks about designing for load-balancing and adaptive provisioning, it’s basically saying we need shock absorbers for the entire power grid. Without them, we’re not just risking a data center outage; we’re flirting with local brownouts or worse.
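To make the spike problem concrete, here is a toy sketch of why synchronized bursts are so much harder on a supply than the same work spread out. It is not from the DCD article; the function names, the GPU count, and the power figures are all made up for illustration, and staggering bursts is just one simple stand-in for the broader idea of load smoothing.

```python
def cluster_load(num_gpus, steps, period, burst_len, idle_kw, burst_kw, stagger):
    """Return total cluster power draw (kW) for each time step.

    Every GPU bursts for `burst_len` steps out of each `period` steps.
    With stagger=False all GPUs burst at the same time; with stagger=True
    each GPU's burst window is shifted so bursts are spread over the period.
    All numbers are illustrative assumptions, not measurements.
    """
    load = []
    for t in range(steps):
        total = 0.0
        for g in range(num_gpus):
            offset = (g * period // num_gpus) if stagger else 0
            in_burst = (t + offset) % period < burst_len
            total += burst_kw if in_burst else idle_kw
        load.append(total)
    return load


def peak_to_average(load):
    """Ratio of the highest draw to the mean draw over the whole trace."""
    return max(load) / (sum(load) / len(load))


# Hypothetical cluster: 8 GPUs, each bursting 2 steps out of every 8.
params = dict(num_gpus=8, steps=16, period=8, burst_len=2,
              idle_kw=0.1, burst_kw=0.7)
synced = cluster_load(stagger=False, **params)
staggered = cluster_load(stagger=True, **params)

print("synchronized peak/avg:", round(peak_to_average(synced), 2))
print("staggered peak/avg:   ", round(peak_to_average(staggered), 2))
```

With these made-up numbers, both clusters use the same energy overall, but the synchronized one peaks at about 2.8 times its average draw while the staggered one stays flat. The upstream equipment has to be sized for the peak, not the average, which is the gap that “shock absorbers” are meant to close.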

Design isn’t just blueprints

Now, when DCD says “design,” they’re not just talking about where to put the servers. They’re talking about a holistic process that has to solve for procurement, geographic constraints, permitting, and energy modeling before the first shovel hits the dirt. Get this wrong early, and the cascading failures are brutal: years of delays, inefficient layouts that waste energy (and money), and power shortfalls that can make a billion-dollar facility obsolete before it even opens.

I think the most underrated point here is about permitting and regulation. AI data centers are facing way more scrutiny, and for good reason. Local communities and utilities are rightfully asking, “Can our grid even handle this?” A smart, forward-thinking design that integrates redundancy and grid-stability features from day one isn’t just an engineering win; it’s a political and regulatory one. It can turn a contentious project into a manageable one. Conversely, a clumsy design guarantees a bureaucratic nightmare.

Can we actually build them?

So, is all this talk of “smart design” just wishful thinking? There’s good reason to be skeptical. The article hints at it by mentioning that traditional design methods are manual, error-prone, and slow. We’re trying to build the energy infrastructure of the future with the project management tools of the past. That’s a recipe for failure at scale.

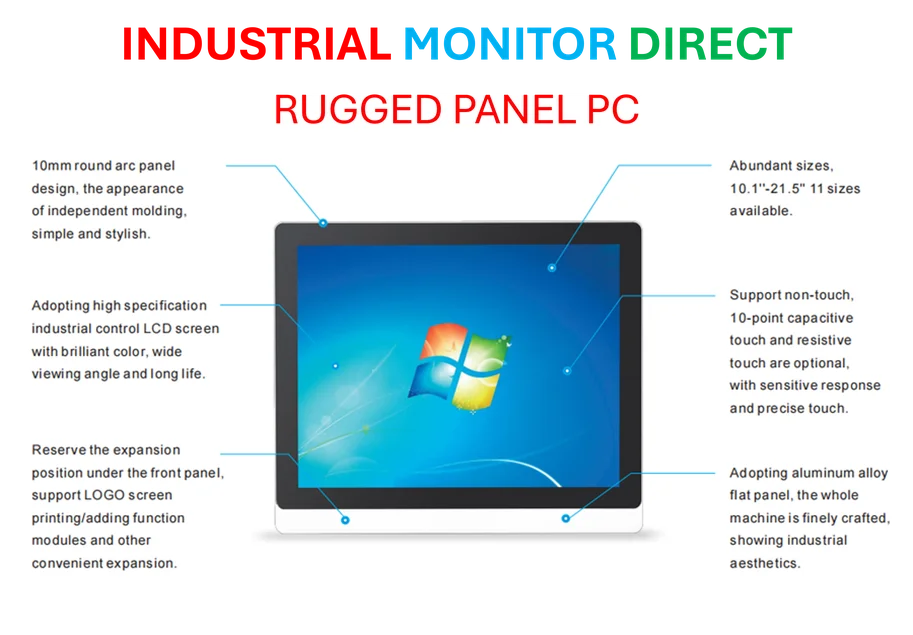

And let’s be real: the power demand is staggering. Scientific American notes the AI boom could use a shocking amount of electricity, which aligns perfectly with DCD’s warning. We’re not just plugging in more servers; we’re asking the fundamental architecture of our power distribution to evolve at a pace it never has before. The companies that will win in this space are the ones that solve the industrial computing problem at the hardware level, ensuring every component, from the facility’s transformers down to the individual industrial panel PCs managing climate control and load monitoring, is built for this harsh, variable environment. Failure at the component level isn’t an option when you’re managing gigawatt-scale volatility.

Ultimately, the article is a stark warning. The AI revolution isn’t being held back by algorithms or chip shortages alone. It’s being held back by watts, volts, and the sheer physical complexity of housing the machines that use them. Without a radical redesign of the data center itself, the AI boom might just power itself into a wall.